In containerized application development, creating efficient and accurate Dockerfiles is essential for deployment consistency and scalability. As Large Language Models (LLMs) advance, their potential for automating Dockerfile generation becomes an interesting area of study.

During the development of an AI-assisted debugging tool for pipelines, we encountered varying results when using different LLMs to generate Dockerfiles. This prompted a more systematic investigation into their performance, with a particular focus on comparing more resource-intensive models against lighter alternatives like GPT-4o-mini.

Question: Which LLM is best for generating Dockerfiles, and should you choose the largest, most capable model or the most cost-effective one?

This analysis walks through our methodology, our findings, and the practical implications for developers and DevOps professionals. We aim to give an objective assessment of how these LLMs perform at Dockerfile generation, considering accuracy, efficiency, and resource requirements.

Why Dockerfiles Matter

Dockerfiles are the blueprint for containerized applications, defining the environment, dependencies, and configuration needed to run your code consistently across different systems. Getting these right is critical for several reasons:

Consistency: Ensures your application behaves the same way in development, testing, and production environments.

Efficiency: Well-crafted Dockerfiles lead to optimized container images, reducing build times and resource usage.

Scalability: Properly containerized applications can be easily scaled horizontally to meet demand.

Portability: Containers can run on any system that supports Docker, simplifying deployment across different infrastructures.

However, creating optimal Dockerfiles can be challenging, especially for complex applications or for developers unfamiliar with containerization best practices. This is where AI-assisted generation could streamline the process.

Our Testing Methodology

To evaluate the effectiveness of different LLMs in generating Dockerfiles, we developed a systematic approach:

- Project Selection

We chose 10 diverse projects representing various complexities and tech stacks, from simple web applications to complex ML pipelines.

- Custom CLI Tool

We created docker-generate, a tool that provides a consistent interface between project structures and AI models.

- Context Gathering

The tool extracts relevant information from project files (e.g., requirements.txt, README.md) to provide context to the LLMs.

- Model Testing

We tested three models: GPT-4o, GPT-4o-mini, and Claude 3.5 Sonnet.

- Evaluation Criteria

  - Build Success Rate: Percentage of Dockerfiles that built successfully.

  - Run Success Rate: Percentage of containers that ran without errors.

  - First Attempt Accuracy: Success rate on the first attempt, before any retry.

  - Multi-Container Support: Ability to handle complex, multi-service projects.

- Iterative Testing

For failed attempts, we provided error messages back to the LLMs and allowed a retry.
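The context-gathering and retry steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the actual docker-generate implementation: `generate` and `check` are hypothetical callables standing in for the LLM call and the build/run step, the prompt wording is invented, and `package.json` in `CONTEXT_FILES` is an assumption (the source names only requirements.txt and README.md).

```python
from pathlib import Path
from typing import Callable, Optional, Tuple

# Files to show the model. requirements.txt and README.md come from the
# article; package.json is an assumption added for Node.js projects.
CONTEXT_FILES = ("requirements.txt", "README.md", "package.json")


def gather_context(project_dir: str, max_chars: int = 4000) -> str:
    """Concatenate the context files that exist in project_dir."""
    parts = []
    for name in CONTEXT_FILES:
        path = Path(project_dir) / name
        if path.is_file():
            text = path.read_text(encoding="utf-8", errors="replace")
            # Truncate long files so the prompt stays small.
            parts.append(f"--- {name} ---\n{text[:max_chars]}")
    return "\n\n".join(parts)


def generate_with_retry(
    context: str,
    generate: Callable[[str], str],
    check: Callable[[str], Optional[str]],
    max_retries: int = 1,
) -> Tuple[str, bool, int]:
    """Ask for a Dockerfile and feed the error message back on failure.

    generate: hypothetical LLM wrapper (prompt -> Dockerfile text).
    check: hypothetical build/run step; returns None on success,
           otherwise the error message.
    Returns (dockerfile, succeeded, attempts_used).
    """
    prompt = f"Write a Dockerfile for this project.\n\n{context}"
    dockerfile = ""
    for attempt in range(1, max_retries + 2):
        dockerfile = generate(prompt)
        error = check(dockerfile)
        if error is None:
            return dockerfile, True, attempt
        # Build the retry prompt from the failed output and its error.
        prompt = (
            f"This Dockerfile failed.\n\n{dockerfile}\n\n"
            f"Error:\n{error}\n\nProject context:\n{context}\n\n"
            "Return a corrected Dockerfile."
        )
    return dockerfile, False, max_retries + 1
```

Keeping the model call and the build step behind plain callables means the same loop can be reused for every model under test, and `max_retries=1` mirrors the single retry described above.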

Our Test Data and Results

We selected and created 10 diverse projects representing various project types and complexities:

| ID | Project & Description | Framework | Dockerfile Complexity |
|---|---|---|---|
| 1 | Ploomber AI Debugger | Streamlit | Easy: Port exposure, env variables |
| 2 | Streamlit with a Database | psycopg2 | Easy: Requires gcc for package building |
| 3 | JAN: LLM local inference | LLM framework | High: GPU config, full dev environment |
| 4 | Recommendation System | Streamlit + Surprise | Medium: gcc for package building |
| 5 | Simple React Webapp | React | Low: Basic Node.js setup |
| 6 | Gaussian Blur Image Processor | Dash + OpenCV | Medium: OpenCV dependencies |
| 7 | Image Classifier: CPU vs GPU inference | Streamlit + PyTorch | High: CUDA access configuration |
| 8 | Microservices: Web server, queue, worker | Flask + Redis + Celery | Medium: Multiple Dockerfiles needed; one Python package missing from the dependencies |
| 9 | ETL Data Pipeline | Airflow + PostgreSQL | High: System deps, DB setup, Airflow config |
| 10 | ML Model Serving via API | FastAPI + MLflow | High: Model versioning, environment reproduction |

Each LLM generates Dockerfiles based on minimal project information. For complex scenarios (e.g., GPU access, multi-container setups), additional context is provided to ensure fair comparison.

Specific Scenario Notes

- SQL App: An `init.sql` and a Dockerfile for the database are in a `db_setup/` folder (not given to the LLM).

- Image Classifier: Prompt includes “Create a Dockerfile with CUDA GPU access. Use Python 3.10”.

- Microservices: The LLM needs to generate two Dockerfiles, one for the web service and one for the worker service. A `docker-compose.yml` is provided, but Werkzeug is missing from the dependencies.

- Data Pipeline & ML Model Serving: Additional prompts cover service setup, database connections, environment variables, and volume persistence.

LLM Performance Analysis: Dockerfile Generation

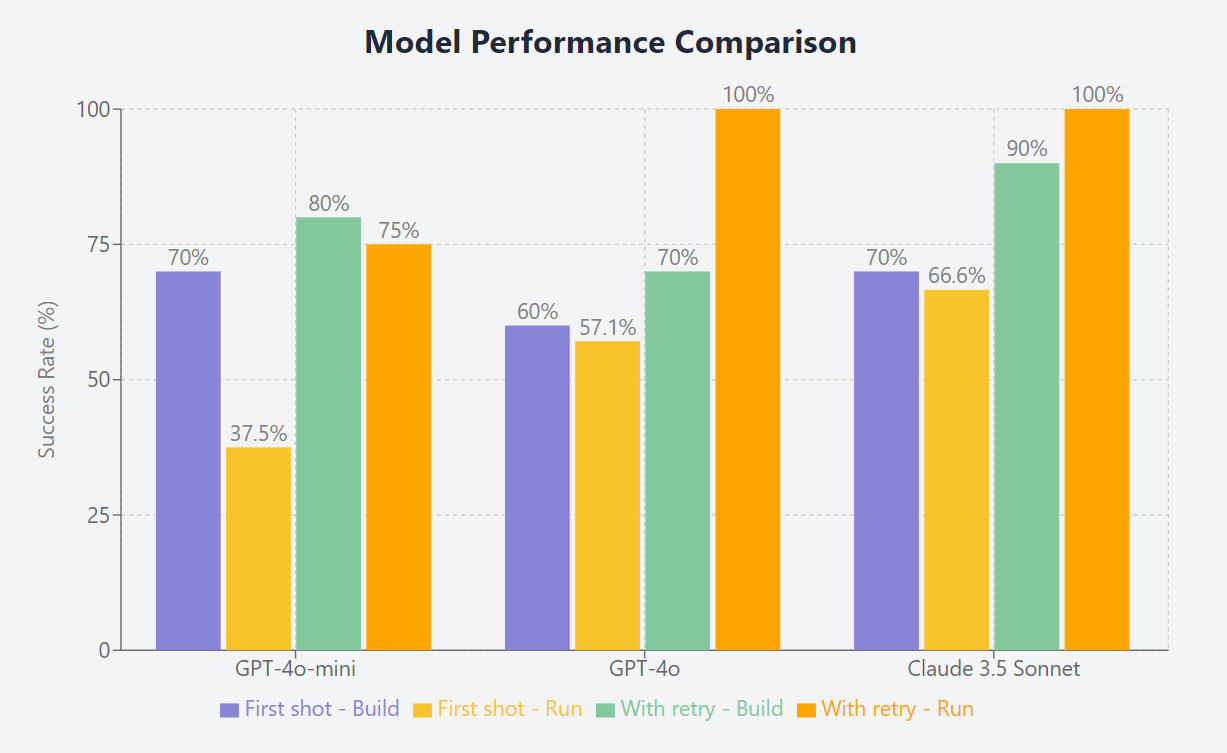

Model Comparison: Visual Overview

Dockerfile Build Success Rates

| Model | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Build Success Rate |
|---|---|---|---|---|---|---|---|---|---|---|---|
| GPT-4o-mini | ✅ | ✅ | ❌ | ❌ | ✅ | ✅ | ✅ | ✅ | ❌ | ✅ | 70% |
| GPT-4o-mini with retry | | | ✅ | ❌ | | | | | ❌ | | 80% |
| GPT-4o | ✅ | ✅ | ❌ | ❌ | ✅ | ✅ | ❌ | ✅ | ❌ | ✅ | 60% |
| GPT-4o with retry | | | ❌ | ✅ | | | ❌ | | ❌ | | 70% |
| Sonnet | ✅ | ✅ | ❌ | ❌ | ✅ | ✅ | ✅ | ✅ | ❌ | ✅ | 70% |
| Sonnet with retry | | | ❌ | ✅ | | | | | ✅ | | 90% |

Container Runtime Success Rates

| Model | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Run Success Rate |
|---|---|---|---|---|---|---|---|---|---|---|---|
| GPT-4o-mini | ❌ | ❌ | ❌ | - | ✅ | ✅ | ✅ | ❌ | - | ❌ | 37.5% |
| GPT-4o-mini with retry | ✅ | ✅ | ❌ | | | | | ❌ | | ✅ | 75% |
| GPT-4o | ❌ | ✅ | - | ✅ | ✅ | ✅ | - | ❌ | - | ❌ | 57.1% |
| GPT-4o with retry | ✅ | | | | | | | ✅ | | ✅ | 100% |
| Sonnet | ❌ | ✅ | - | ✅ | ✅ | ✅ | ✅ | ❌ | ✅ | ❌ | 66.6% |
| Sonnet with retry | ✅ | | | | | | | ✅ | | ✅ | 100% |
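The run success percentages appear to be computed only over containers that were actually run, with `-` cells (no image to run) left out of the denominator. The source does not state this rule; it is inferred from the numbers, and the short sketch below reproduces the first-attempt figures from the table rows under that assumption. The function name `success_rate` is our own.

```python
def success_rate(cells):
    """Percentage of ✅ among attempted cells; "-" means the container was not run."""
    attempted = [c for c in cells if c != "-"]
    if not attempted:
        return 0.0
    return round(100 * attempted.count("✅") / len(attempted), 1)


# First-attempt rows copied from the Container Runtime Success Rates table.
first_attempt_runs = {
    "GPT-4o-mini": "❌ ❌ ❌ - ✅ ✅ ✅ ❌ - ❌".split(),
    "GPT-4o": "❌ ✅ - ✅ ✅ ✅ - ❌ - ❌".split(),
    "Sonnet": "❌ ✅ - ✅ ✅ ✅ ✅ ❌ ✅ ❌".split(),
}

for model, cells in first_attempt_runs.items():
    print(model, success_rate(cells))
```

This gives 3/8 = 37.5 for GPT-4o-mini, 4/7 = 57.1 for GPT-4o, and 6/9 = 66.7 for Sonnet; the table's 66.6% is the same fraction truncated rather than rounded.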

Key Findings

Main Observations:

- Overall Performance:

  - Claude 3.5 Sonnet showed the highest overall accuracy and adaptability (90% build success and 100% run success after a retry).

  - GPT-4o demonstrated creative problem-solving but occasionally struggled with specific technical requirements.

  - GPT-4o-mini performed surprisingly well, offering a good balance of accuracy and cost. It stands out as the value leader, especially when leveraging iterative refinement.

- Improvement with Iteration:

  - All models improved when given a chance to refine their output based on error messages: build success rates rose by 10 to 20 percentage points, and run success rates rose from between 37.5% and 66.6% on the first attempt to between 75% and 100% after a retry. This highlights the importance of iterative development in AI-assisted coding.

- Handling Complex Scenarios:

  - Full development environments and CUDA setups proved challenging for all models.

  - Dependency management in multi-container setups was a differentiator, with Claude 3.5 Sonnet showing superior performance.

- Breakdown:

  - Experiment 3 (Full Dev Environment): Proved challenging for all models.

  - Experiment 7 (CUDA Setup): GPT-4o struggled to find appropriate CUDA-enabled base images.

  - Experiment 8 (Dependency Management):

    - GPT-4o-mini couldn’t resolve dependency issues.

    - GPT-4o requested user intervention.

    - Claude 3.5 Sonnet proactively added missing dependencies.

- Specific Challenges:

  - OpenAI models struggled with Airflow permissions in Docker Compose setups.

  - All models tended to default to outdated versions of certain tools (e.g., MLflow), indicating a need for more up-to-date training data.

- Retry Effectiveness: A retry with the error message lifted every model's results, which makes iterative refinement worth building into the workflow.
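The Experiment 8 failure mode, a package the code needs but the dependency list omits, can be caught with a quick check before any build. The sketch below is an illustration only: the requirements content and the list of needed packages are hypothetical (the project's actual files are not shown in this article), and the parser handles only simple requirements.txt lines.

```python
import re


def declared_packages(requirements_text):
    """Return normalized package names listed in a requirements.txt-style string."""
    names = set()
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue  # skip blanks, comments, and pip options such as "-r other.txt"
        # The name ends at the first extras bracket, version specifier, marker, or space.
        name = re.split(r"[\[<>=!~;\s]", line, maxsplit=1)[0]
        names.add(name.lower().replace("_", "-"))
    return names


def missing_packages(requirements_text, needed):
    """Return the names in `needed` that the requirements text does not declare."""
    declared = declared_packages(requirements_text)
    return sorted(n for n in needed if n.lower().replace("_", "-") not in declared)


# Hypothetical example: Werkzeug is needed but not declared.
sample_requirements = "Flask==2.0.1\ncelery[redis]>=5.0\nredis  # broker client\n"
print(missing_packages(sample_requirements, ["flask", "werkzeug", "redis"]))
```

With the sample input this reports `werkzeug` as the only missing package. A result like this could be included in the prompt, so the model does not have to infer the gap from a runtime error.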

Implications for Developers

- AI is a capable assistant, not a replacement: While LLMs show promise in generating Dockerfiles, they’re best used as a starting point or assistant rather than a complete replacement for human expertise.

- Importance of Review and Testing: Always review and test AI-generated Dockerfiles before using them in production. The models can make mistakes or use outdated practices.

- Iterative Approach: Using AI in an iterative manner, where you provide feedback and allow for refinement, yields the best results.

- Model Selection: Consider your specific needs when choosing an LLM. If you’re working on complex, multi-container setups, a more advanced model like Claude 3.5 Sonnet might be worth the additional cost. For simpler projects, GPT-4o-mini could offer a good balance of performance and efficiency.

Conclusion

AI-assisted Dockerfile generation shows promise in streamlining the containerization process, especially for developers new to Docker or working on complex projects. However, it’s not a silver bullet. The technology works best when combined with human oversight and domain expertise.

As LLMs continue to evolve and be trained on more up-to-date information, we can expect their performance in specialized tasks like Dockerfile generation to improve. For now, they serve as a valuable tool in the developer’s toolkit, capable of providing a solid starting point and assisting with troubleshooting.

Try It Yourself

If you’re interested in experimenting with AI-generated Dockerfiles, try it now at dockerfile.ploomber.app.