Introduction

The Myers-Briggs Type Indicator (MBTI) is a popular psychological assessment tool that categorizes individuals into one of 16 personality types based on preferences in how they perceive the world and make decisions. Each type combines one choice from each of four binary preferences (2 × 2 × 2 × 2 = 16 types): Introversion (I) or Extraversion (E), Sensing (S) or Intuition (N), Thinking (T) or Feeling (F), and Judging (J) or Perceiving (P).

One simple way to score the answers is a dichotomy map, which treats each preference as mutually exclusive: if you are an introvert, you are not an extrovert; if you are a thinker, you are not a feeler; if you are a judger, you are not a perceiver. This approach is simple and intuitive, but it is also limiting, because it does not account for people who are both introverted and extroverted (ambiverts), or who are both thinkers and feelers.

However, with advances in natural language processing, could a large language model (LLM) deduce your personality type from your responses in a manner akin to a human interpreter, and handle ambiguity such as ambivert characteristics? This is the question we set out to explore through a full-stack application built with OpenAI’s GPT-4 as our LLM, Haystack by deepset to manage the backend logic and data flow, Solara for the frontend interface, and Ploomber Cloud to deploy an application we can share with others.

About the application we developed

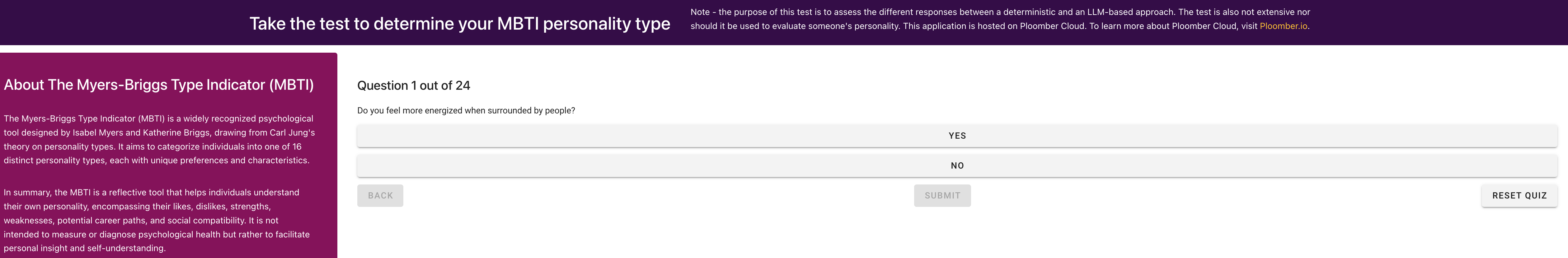

The application we developed presents the user with a series of questions that correspond to the dichotomies in the MBTI. For example, “Do you feel more energized when surrounded by people?” is a question that probes the Extraversion/Introversion dichotomy. The user responds with a simple “Yes” or “No”, and each response is stored together with the trait label of its question. The trait labels are as follows:

- Extraversion (E) or Introversion (I)

- Sensing (S) or Intuition (N)

- Thinking (T) or Feeling (F)

- Judging (J) or Perceiving (P)

We compared two methodologies: a deterministic dichotomy approach, and an LLM-powered approach in which the LLM receives the questions and answers and is instructed to determine the personality type from them.

Application architecture

For this project, we used the Haystack framework to manage the backend logic and data flow, and Solara for the frontend interface. We used Ploomber Cloud to deploy an application we can share with others.

Within the backend, we implemented both methodologies: the dichotomy approach and the LLM-powered approach.

Within the frontend, we implemented a simple interface that allows the user to answer the questions and see the results of the dichotomy approach and the LLM-powered approach.

Try the application on Ploomber Cloud

A live version of the application is available at https://ploomber.cloud/p/mbti.

A complete implementation is also available in our open-source repository.

Questions asked

A total of 24 yes/no questions are asked, 6 for each of the 4 dichotomies. For each dichotomy, 3 questions target each of the two traits, and the questions alternate between the two traits, starting with the first one (E, S, T or J). The questions are as follows:

```text
Start
|--- Extraversion (E) – Introversion (I)
|    |--- Do you feel more energized when surrounded by people? (E)
|    |--- Do you often find solitude more refreshing than social gatherings? (I)
|    |--- When faced with a problem, do you prefer discussing it with others? (E)
|    |--- Do you tend to process your thoughts internally before you speak? (I)
|    |--- At parties, do you initiate conversations with new people? (E)
|    |--- Do you prefer spending weekends quietly at home rather than going out? (I)
|
|--- Sensing (S) – Intuition (N)
|    |--- Do you focus more on the details and facts of your immediate surroundings? (S)
|    |--- Are you more interested in exploring abstract theories and future possibilities? (N)
|    |--- In learning something new, do you prefer hands-on experience over theory? (S)
|    |--- Do you often think about how actions today will affect the future? (N)
|    |--- When planning a vacation, do you prefer having a detailed itinerary? (S)
|    |--- Do you enjoy discussing symbolic or metaphorical interpretations of a story? (N)
|
|--- Thinking (T) – Feeling (F)
|    |--- When making decisions, do you prioritize logic over personal considerations? (T)
|    |--- Are your decisions often influenced by how they will affect others emotionally? (F)
|    |--- In arguments, do you focus more on being rational than on people's feelings? (T)
|    |--- Do you strive to maintain harmony in group settings, even if it means compromising? (F)
|    |--- Do you often rely on objective criteria to assess situations? (T)
|    |--- When a friend is upset, is your first instinct to offer emotional support rather than solutions? (F)
|
|--- Judging (J) – Perceiving (P)
     |--- Do you prefer to have a clear plan and dislike unexpected changes? (J)
     |--- Are you comfortable adapting to new situations as they happen? (P)
     |--- Do you set and stick to deadlines easily? (J)
     |--- Do you enjoy being spontaneous and keeping your options open? (P)
     |--- Do you find satisfaction in completing tasks and finalizing decisions? (J)
     |--- Do you prefer exploring various options before making a decision? (P)
```

Simpler Approach: dichotomy map with Python

A dichotomy map assigns each question to one of the four MBTI dichotomies and gives it a weight. The map holds one list per dichotomy, with one weight for each of its six questions, in the order the questions are asked. Below is a dichotomy map for our 24 questions:

```python
dichotomy_map = {
    'E/I': [1, 0, 1, 0, 1, 0],
    'S/N': [1, 0, 1, 0, 1, 0],
    'T/F': [1, 0, 1, 0, 1, 0],
    'J/P': [1, 0, 1, 0, 1, 0]
}
```
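The 1s in each list line up with the questions that target the first trait of the pair (E, S, T or J), and the 0s with the questions that target the second trait, because the questions alternate within each dichotomy. The sketch below derives the same map from the trait labels in the question list above; TRAIT_SEQUENCE, PAIRS and derive_dichotomy_map are illustrative names, not part of the application.

```python
# Trait targeted by each of the 24 questions, in the order they are asked
# (three per trait, alternating within each dichotomy).
TRAIT_SEQUENCE = list("EIEIEI" "SNSNSN" "TFTFTF" "JPJPJP")

# Each dichotomy label maps to its (first, second) trait.
PAIRS = {"E/I": ("E", "I"), "S/N": ("S", "N"), "T/F": ("T", "F"), "J/P": ("J", "P")}


def derive_dichotomy_map(traits):
    """Return one weight list per dichotomy: 1 for a first-trait question, 0 otherwise."""
    derived = {}
    for block, (label, (first, _second)) in enumerate(PAIRS.items()):
        block_traits = traits[block * 6:(block + 1) * 6]  # the six questions of this dichotomy
        derived[label] = [1 if t == first else 0 for t in block_traits]
    return derived


dichotomy_map = derive_dichotomy_map(TRAIT_SEQUENCE)
print(dichotomy_map["E/I"])  # [1, 0, 1, 0, 1, 0]
```

Read this way, a weight of 0 means that the answer to a second-trait question adds nothing to either score in the scoring loop below.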

We can initialize the scores for each trait in a dictionary:

```python
scores = {
    'E': 0,
    'I': 0,
    'S': 0,
    'N': 0,
    'T': 0,
    'F': 0,
    'J': 0,
    'P': 0
}
```

We can capture the responses to the 24 questions and then iterate over the responses. For each response:

- If the response is “Yes”, it selects the first trait of the dichotomy (e.g., ‘E’ for ‘E/I’).

- If the response is “No”, it selects the second trait of the dichotomy (e.g., ‘I’ for ‘E/I’).

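The score for the selected trait is then increased by the weight in dichotomy_map for that question. Because the questions are grouped in blocks of six and each dichotomy’s list holds six weights, the modulo operator (%) converts the overall question index (0 to 23) into the position within its block (0 to 5). Note that with the pattern [1, 0, 1, 0, 1, 0], only the questions at positions 0, 2 and 4 (those targeting E, S, T or J) change a score; answers to the other questions add 0.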

```python
# Iterate through the responses and update scores
for i, response in enumerate(responses):
    if i < 6:  # E/I questions
        trait = 'E' if response == 'Yes' else 'I'
        scores[trait] += dichotomy_map['E/I'][i % 6]
    elif i < 12:  # S/N questions
        trait = 'S' if response == 'Yes' else 'N'
        scores[trait] += dichotomy_map['S/N'][i % 6]
    elif i < 18:  # T/F questions
        trait = 'T' if response == 'Yes' else 'F'
        scores[trait] += dichotomy_map['T/F'][i % 6]
    else:  # J/P questions
        trait = 'J' if response == 'Yes' else 'P'
        scores[trait] += dichotomy_map['J/P'][i % 6]
```
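The four if/elif branches differ only in which dichotomy they use, so the same logic can also be written with divmod: i // 6 picks the dichotomy block and i % 6 the position inside it. This is a sketch of that equivalent formulation; score_responses, DICHOTOMIES and WEIGHTS are illustrative names, and the function keeps the same weights and the same “Yes selects the first trait” rule as the loop above.

```python
# Dichotomies in question order, each with its (first, second) trait.
DICHOTOMIES = [("E/I", "E", "I"), ("S/N", "S", "N"), ("T/F", "T", "F"), ("J/P", "J", "P")]
WEIGHTS = {label: [1, 0, 1, 0, 1, 0] for label, _, _ in DICHOTOMIES}


def score_responses(responses):
    """Tally trait scores for 24 'Yes'/'No' answers, mirroring the if/elif loop."""
    scores = dict.fromkeys("EISNTFJP", 0)
    for i, response in enumerate(responses):
        block, position = divmod(i, 6)  # which dichotomy, and where inside it
        label, first, second = DICHOTOMIES[block]
        trait = first if response == "Yes" else second
        scores[trait] += WEIGHTS[label][position]
    return scores


print(score_responses(["Yes"] * 24))
# {'E': 3, 'I': 0, 'S': 3, 'N': 0, 'T': 3, 'F': 0, 'J': 3, 'P': 0}
```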

The result of this process is a tally of scores for each of the eight MBTI traits. This scoring mechanism is essential for determining the user’s MBTI type based on their responses to the quiz questions. We can use the following code to compute the final MBTI personality type:

```python
def calculate_mbti_scores(responses):
    """
    This function takes responses and calculates the MBTI scores.

    Parameters
    ----------
    responses : list
        A list of 24 answers to the MBTI questions, in the order the
        questions are asked. Each answer is the string 'Yes' or 'No'.

    Returns
    -------
    scores : dict
        A dictionary containing the MBTI scores for each trait.
    """
    if len(responses) != 24:
        raise ValueError("There must be exactly 24 responses.")

    # Weight of each question within its dichotomy.
    # 'Yes' selects the first trait in the dichotomy and 'No' the second.
    dichotomy_map = {
        'E/I': [1, 0, 1, 0, 1, 0],
        'S/N': [1, 0, 1, 0, 1, 0],
        'T/F': [1, 0, 1, 0, 1, 0],
        'J/P': [1, 0, 1, 0, 1, 0]
    }

    # Initial scores
    scores = {'E': 0, 'I': 0, 'S': 0, 'N': 0, 'T': 0, 'F': 0, 'J': 0, 'P': 0}

    # Iterate through the responses and update scores
    for i, response in enumerate(responses):
        if i < 6:  # E/I questions
            trait = 'E' if response == 'Yes' else 'I'
            scores[trait] += dichotomy_map['E/I'][i % 6]
        elif i < 12:  # S/N questions
            trait = 'S' if response == 'Yes' else 'N'
            scores[trait] += dichotomy_map['S/N'][i % 6]
        elif i < 18:  # T/F questions
            trait = 'T' if response == 'Yes' else 'F'
            scores[trait] += dichotomy_map['T/F'][i % 6]
        else:  # J/P questions
            trait = 'J' if response == 'Yes' else 'P'
            scores[trait] += dichotomy_map['J/P'][i % 6]

    return scores


def classic_mbti(responses):
    """
    This function takes responses and determines the MBTI type using a
    classic decision-tree approach.

    Parameters
    ----------
    responses : list
        A list of 24 answers ('Yes' or 'No'), as expected by
        calculate_mbti_scores.

    Returns
    -------
    mbti_type : str
        The MBTI type.
    """
    scores = calculate_mbti_scores(responses)

    # Process the scores to determine the MBTI type;
    # ties go to the first trait of each pair (E, S, T, J).
    mbti_type = ''
    for trait_pair in ['EI', 'SN', 'TF', 'JP']:
        trait1, trait2 = trait_pair
        if scores[trait1] >= scores[trait2]:
            mbti_type += trait1
        else:
            mbti_type += trait2

    return mbti_type
```
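Because the second-trait questions carry a weight of 0, classic_mbti ignores the answers to the I, N, F and P questions; for example, answering “Yes” to all 24 questions yields ESTJ. If every answer should count, one alternative (not the scoring used in the application) is to give a point to the question’s own trait on “Yes” and to the opposite trait on “No”. A minimal sketch, assuming the 24 questions are asked in the order listed above; variant_mbti, QUESTION_TRAITS and OPPOSITE are hypothetical names.

```python
# Trait targeted by each question, in the order asked (hypothetical constant
# mirroring the question list: three questions per trait, alternating).
QUESTION_TRAITS = "EIEIEI" "SNSNSN" "TFTFTF" "JPJPJP"
OPPOSITE = {"E": "I", "I": "E", "S": "N", "N": "S",
            "T": "F", "F": "T", "J": "P", "P": "J"}


def variant_mbti(responses):
    """Alternative scoring in which every 'Yes'/'No' answer adds one point."""
    if len(responses) != len(QUESTION_TRAITS):
        raise ValueError("There must be exactly 24 responses.")
    scores = dict.fromkeys("EISNTFJP", 0)
    for trait, response in zip(QUESTION_TRAITS, responses):
        # "Yes" supports the question's own trait; "No" supports the opposite one.
        scores[trait if response == "Yes" else OPPOSITE[trait]] += 1
    # Ties go to the first trait of each pair, as with the >= rule in classic_mbti.
    return "".join(a if scores[a] >= scores[b] else b
                   for a, b in ["EI", "SN", "TF", "JP"])


# "Yes" to all three I questions and to two of the three E questions,
# "No" to the third E question; "Yes" to every other question.
answers = ["Yes", "Yes", "Yes", "Yes", "No", "Yes"] + ["Yes"] * 18
print(variant_mbti(answers))  # ISTJ
```

For the same answers, classic_mbti returns ESTJ, since only the three E questions count and two of them were answered “Yes”. In this variant, answering “Yes” (or “No”) to everything produces a 3 to 3 tie in every dichotomy, so the tie-breaking rule decides the whole type.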

LLM-powered Approach

With the LLM-based approach, we use the same questions, but instead of a dichotomy map, an LLM determines the MBTI type from the responses. We use the Haystack 2.0 beta framework to manage the backend logic and build a pipeline that takes the responses as input and prompts an LLM to determine the MBTI type.

Installing Haystack

We can install Haystack beta 2.0 with pip:

```shell
pip install haystack-ai
```

Creating a data structure for the questions, traits and answers

Let’s begin by creating a data structure for the questions, traits and answers. Each question is stored as a dictionary with the keys text, trait and answer, and the dictionaries are collected in a list. Below is an example:

```python
# An example subset of the questions, with sample answers
questions = [
    {"text": "Do you feel more energized when surrounded by people?", "trait": "E", "answer": "Yes"},
    {"text": "Do you often find solitude more refreshing than social gatherings?", "trait": "I", "answer": "No"},
    {"text": "Do you focus more on the details and facts of your immediate surroundings?", "trait": "S", "answer": "Yes"},
    {"text": "Are you more interested in exploring abstract theories and future possibilities?", "trait": "N", "answer": "No"},
    {"text": "When making decisions, do you prioritize logic over personal considerations?", "trait": "T", "answer": "Yes"},
    {"text": "Are your decisions often influenced by how they will affect others emotionally?", "trait": "F", "answer": "Yes"},
    {"text": "Do you enjoy being spontaneous and keeping your options open?", "trait": "P", "answer": "No"},
    {"text": "Do you find satisfaction in completing tasks and finalizing decisions?", "trait": "J", "answer": "No"},
    {"text": "Do you prefer exploring various options before making a decision?", "trait": "P", "answer": "Yes"},
]
```
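Since the LLM only sees what is stored in these dictionaries, a typo in a trait label or an answer would silently end up in the prompt. A minimal validation sketch that could run before the documents are built; validate_questions is a hypothetical helper, not part of Haystack or the application.

```python
VALID_TRAITS = set("EISNTFJP")
VALID_ANSWERS = {"Yes", "No"}


def validate_questions(questions):
    """Return a list of problems found in the question dicts (empty if none)."""
    problems = []
    for i, q in enumerate(questions):
        missing = [key for key in ("text", "trait", "answer") if key not in q]
        if missing:
            problems.append(f"question {i}: missing keys {missing}")
            continue  # the remaining checks need all three keys
        if q["trait"] not in VALID_TRAITS:
            problems.append(f"question {i}: unknown trait {q['trait']!r}")
        if q["answer"] not in VALID_ANSWERS:
            problems.append(f"question {i}: answer must be 'Yes' or 'No', got {q['answer']!r}")
    return problems


sample = [
    {"text": "Do you feel more energized when surrounded by people?", "trait": "E", "answer": "Yes"},
    {"text": "Do you set and stick to deadlines easily?", "trait": "X", "answer": "maybe"},
]
print(validate_questions(sample))
```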

We then wrap each question in a Haystack Document, storing its trait and the user’s answer as metadata, and collect the documents in a list:

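```python
from haystack import Document

# responses: the user's 'Yes'/'No' answers, in the same order as questions
for i, question in enumerate(questions):
    question['meta'] = {
        'trait': question['trait'],
        'answer': responses[i]
    }

documents = [Document(content=question["text"], meta=question["meta"]) for question in questions]
```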

These documents can then be passed to the LLM through a Haystack pipeline.

Providing instructions to an LLM through prompting

We use Haystack’s PromptBuilder (Haystack 2.0 beta) to provide instructions through a prompt template. PromptBuilder renders the template with Jinja2, filling in the documents and the query. Below is our template:

```python
prompt_template = """
Determine the personality of someone using the Myers-Briggs Type Indicator (MBTI) test. In this test,
a user answers a series of questions using "Yes" and "No" responses. The questions are
labelled according to the trait, where the traits are:
E = Extraversion
I = Introversion
S = Sensing
N = Intuition
T = Thinking
F = Feeling
J = Judging
P = Perceiving
Please provide a concise explanation for your response and also provide insights into the personality type of the user.
In your description mention the defining characteristics of the personality type.
If the documents do not contain the answer to the question, say that ‘Answer is unknown.’
Context:
{% for doc in documents %}
Question: {{ doc.content }} Response: {{ doc.meta['answer'] }} Personality trait: {{doc.meta['trait']}} \n
{% endfor %};
Question: {{query}}
\n
"""
```

In the template above, we describe the task to the LLM and use a Jinja loop to list every question together with the user’s response and the question’s trait. Before we can send this prompt to an LLM, let’s build a pipeline that takes the responses as input and returns the MBTI type.
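To check what the LLM actually receives, the Context loop of the template can be rendered on its own. This preview uses the jinja2 package directly (PromptBuilder renders templates with Jinja2) and SimpleNamespace stand-ins for Haystack Documents, since the loop only reads .content and .meta; it is a sketch for inspection, not part of the pipeline.

```python
from types import SimpleNamespace

from jinja2 import Template

# A trimmed copy of the loop in prompt_template (the instructions are omitted here).
context_template = """Context:
{% for doc in documents %}
Question: {{ doc.content }} Response: {{ doc.meta['answer'] }} Personality trait: {{doc.meta['trait']}}
{% endfor %}
Question: {{query}}"""

# Stand-ins for Haystack Documents: only .content and .meta are used by the template.
documents = [
    SimpleNamespace(content="Do you feel more energized when surrounded by people?",
                    meta={"trait": "E", "answer": "Yes"}),
    SimpleNamespace(content="Do you often find solitude more refreshing than social gatherings?",
                    meta={"trait": "I", "answer": "No"}),
]

rendered = Template(context_template).render(
    documents=documents,
    query="Based on the responses, what is this user's Myers-Briggs personality type?",
)
print(rendered)
```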

Building a pipeline with Haystack

We will use Haystack’s Pipeline class to build our pipeline. In the pipeline below, we use the following components:

- DocumentSplitter: This component splits the documents into smaller chunks. We set split_length to 100 and split_overlap to 5. With the default split_by="word", each document is split into chunks of at most 100 words, and each chunk overlaps the previous one by 5 words. Since every question here is far shorter than 100 words, each question remains a single chunk.

- PromptBuilder: This component takes the documents and builds a prompt using the template we defined above.

- OpenAIGenerator: This component takes the prompt and generates a response using the LLM. We use the gpt-4 model and set temperature to 0.1. The temperature is a hyperparameter that controls the randomness of the output: a higher temperature produces more varied responses, while a lower temperature produces less random ones.
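To make split_length and split_overlap concrete, here is a simplified word-based splitter in plain Python. It illustrates the idea, assuming whitespace-separated words; it is not Haystack’s DocumentSplitter implementation, and split_words is a hypothetical name.

```python
def split_words(text, split_length=100, split_overlap=5):
    """Split text into chunks of at most split_length words, overlapping by split_overlap words."""
    if not 0 <= split_overlap < split_length:
        raise ValueError("split_overlap must be >= 0 and smaller than split_length")
    words = text.split()
    step = split_length - split_overlap  # how far each new chunk's start moves forward
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + split_length]))
        if start + split_length >= len(words):  # this chunk already reaches the end
            break
    return chunks


# A short question stays in one chunk; 200 words become 3 overlapping chunks.
print(len(split_words("Do you set and stick to deadlines easily?")))  # 1
print(len(split_words(" ".join(str(n) for n in range(200)))))  # 3
```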

We then add each component to the pipeline with the add_component() method and connect the components with the pipeline.connect() method. Calling pipeline.run() executes the pipeline and returns a dictionary containing the LLM’s reply, which states the MBTI type.

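```python
import os

from dotenv import load_dotenv
from haystack import Document, Pipeline
from haystack.components.builders.prompt_builder import PromptBuilder
from haystack.components.generators import OpenAIGenerator
from haystack.components.preprocessors import DocumentSplitter

# Load the OpenAI API key from a .env file (the variable name is an assumption)
load_dotenv()
api_key = os.getenv("OPENAI_API_KEY")

splitter = DocumentSplitter(split_length=100, split_overlap=5)
prompt_builder = PromptBuilder(prompt_template)
# Note: stable Haystack 2.x releases expect api_key as a Secret,
# e.g. Secret.from_env_var("OPENAI_API_KEY") from haystack.utils
llm = OpenAIGenerator(api_key=api_key,
                      model='gpt-4',
                      generation_kwargs={"temperature": 0.1})

pipeline = Pipeline()
pipeline.add_component("splitter", splitter)
pipeline.add_component(name="prompt_builder", instance=prompt_builder)
pipeline.add_component(name="llm", instance=llm)

# Split documents feed the template's {{ documents }} variable
pipeline.connect("splitter.documents", "prompt_builder.documents")
# The rendered prompt is passed to the LLM
pipeline.connect("prompt_builder", "llm")
```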

We can then execute the pipeline as follows:

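```python
query = "Based on the responses, what is this user's Myers-Briggs personality type?"
answer = pipeline.run(data={'splitter': {'documents': documents}, "prompt_builder": {"query": query}})

# Outputs are keyed by component name; the generator returns a list of replies
print(answer["llm"]["replies"][0])
```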

In the code above, pipeline.run() takes a dictionary keyed by component name. The splitter entry maps documents to the list of Haystack Documents (each holding a question, its trait and the user’s answer), and the prompt_builder entry maps query to the question we want the LLM to answer.
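To compare the LLM’s answer with the deterministic result, it helps to pull the four-letter code out of the free-text reply (with OpenAIGenerator, the reply text is in answer["llm"]["replies"][0]). A minimal sketch using a regular expression; extract_mbti_type is a hypothetical helper, and it returns the first code it finds, so a reply that mentions several types may need a stricter rule.

```python
import re

# Any of the 16 four-letter codes as a standalone word (so "ENFPs" is not matched).
MBTI_PATTERN = re.compile(r"\b[EI][SN][TF][JP]\b")


def extract_mbti_type(reply):
    """Return the first MBTI code in an LLM reply, or None if there is none."""
    match = MBTI_PATTERN.search(reply)
    return match.group(0) if match else None


print(extract_mbti_type("The user's Myers-Briggs personality type is ENTP."))  # ENTP
```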

Interesting findings: the dichotomy approach vs. the LLM approach

The LLM decides the MBTI type based on the responses to other questions when a 50/50 split occurs in a given dichotomy

We found that the LLM-powered approach was able to determine the MBTI type with some degree of accuracy. We also found that the LLM was able to handle ambiguity and ambivert characteristics. For example, if a user responded “Yes” to 3 out of 6 questions in a given dichotomy, the LLM would take into account the nature of other questions and the user’s responses to determine personality characteristics. Below is an example.

Your MBTI type:

According to a deterministic approach: ESTJ.

According to an LLM-generated approach: The user's Myers-Briggs personality type is ENTP.

This is determined by tallying the number of responses that align with each trait. The user answered "Yes" to 3 Extraversion (E) questions and 3 Introversion (I) questions. However, the Extraversion trait is chosen because the user seems to be more energized by social interactions.

For the Sensing (S) and Intuition (N) traits, the user answered "Yes" to 3 Sensing questions and 3 Intuition questions. The Intuition trait is chosen because the user shows a preference for exploring abstract theories and future possibilities.

In the Thinking (T) and Feeling (F) category, the user answered "Yes" to 3 Thinking questions and 3 Feeling questions. The Thinking trait is chosen because the user tends to prioritize logic over personal considerations.

Finally, for the Judging (J) and Perceiving (P) traits, the user answered "Yes" to 3 Judging questions and 3 Perceiving questions. The Perceiving trait is chosen because the user enjoys being spontaneous and keeping options open.

ENTPs are known as "The Debaters" and are characterized by their quick thinking, intellectual nature, and assertiveness. They are often very charismatic and enjoy stimulating conversations. They are also known for their ability to think outside the box and see the big picture. However, they can also be argumentative and may struggle with routine tasks.

The LLM does not always produce the same reasoning or results, and it cannot reliably count answers

Unfortunately, we found that the LLM did not always produce the same reasoning, or even the same MBTI type, for identical answers. Even with the temperature lowered to 0.1 to reduce randomness, repeated runs sometimes gave different results. For the same example as above, the LLM sometimes produced the following reasoning, which also miscounts the answers:

According to a deterministic approach: ESTJ.

According to an LLM-generated approach: The user's Myers-Briggs personality type is ENFP.

This is determined by tallying the responses for each trait. The user answered "Yes" to 3 Extraversion (E) questions and 3 Introversion (I) questions. Since there is a tie, we will choose E because the user seems to enjoy social interactions more. The user answered "Yes" to 3 Sensing (S) questions and 4 Intuition (N) questions, so we choose N. The user answered "Yes" to 3 Thinking (T) questions and 4 Feeling (F) questions, so we choose F. The user answered "Yes" to 3 Judging (J) questions and 4 Perceiving (P) questions, so we choose P.

ENFPs are known as the "Inspirer" or "Champion". They are enthusiastic, creative, and sociable free spirits, who can always find a reason to smile. They are spontaneous, energetic, and enthusiastic, and they have a strong desire to inspire and motivate others. They are also very perceptive and intuitive and are often able to quickly and accurately assess where someone is coming from. ENFPs are also characterized by their incredible ability to relate to others.
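In the second response, the LLM reports four “Yes” answers for Intuition, Feeling and Perceiving, although only three questions target each trait. Tallies like these are trivial in code, so they could be computed deterministically and passed to the LLM instead of asking it to count. A minimal sketch, assuming answers are stored as dictionaries with trait and answer keys, as in the question dictionaries used for the Haystack pipeline; count_yes_by_trait is a hypothetical helper.

```python
from collections import Counter


def count_yes_by_trait(questions):
    """Count 'Yes' answers per trait from dicts with 'trait' and 'answer' keys."""
    counts = Counter(q["trait"] for q in questions if q["answer"] == "Yes")
    # Report all eight traits, including those with zero "Yes" answers.
    return {trait: counts[trait] for trait in "EISNTFJP"}


sample_answers = [
    {"trait": "E", "answer": "Yes"}, {"trait": "I", "answer": "Yes"},
    {"trait": "E", "answer": "No"}, {"trait": "I", "answer": "Yes"},
]
print(count_yes_by_trait(sample_answers))
# {'E': 1, 'I': 2, 'S': 0, 'N': 0, 'T': 0, 'F': 0, 'J': 0, 'P': 0}
```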

Building a UI with Solara

We used Solara to build a simple UI that allows the user to answer the questions and see the results of the dichotomy approach and the LLM-powered approach. We defined the following components:

- PersonalityQuiz: This component displays the question and allows the user to select “Yes” or “No” as a response. It contains the following methods:
  - reset_quiz: resets the quiz to its initial state.
  - handle_answer: handles the user’s response to the question.
  - on_back: allows the user to go back to the previous question.
  - on_submit: defines the application’s behaviour when the user submits their responses.

- QuestionComponent: This component displays the question and allows the user to select “Yes” or “No” as a response. It contains the following method:
  - on_click: handles the user’s response to the question, storing “Yes” or “No”.

- ResultsComponent: This component displays the results of the dichotomy approach and the LLM-powered approach.

- Topbar: This component displays the top bar of the application.

- Sidebar: This component displays the sidebar of the application.

- Page: This component defines the layout of the application, as follows:

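```python
import solara

# Topbar, Sidebar and PersonalityQuiz are the components described above (defined in app.py)


@solara.component
def Page():
    return solara.Column(
        [
            Topbar(),  # This will place the Topbar at the top of the page
            solara.Row([
                Sidebar(),
                PersonalityQuiz(),
            ])
        ],
        style="height: 100vh;"  # Set the height to fill the screen vertically
    )
```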

For more details, please review the app.py file in our open-source repository.
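The navigation methods listed for PersonalityQuiz (reset_quiz, handle_answer, on_back) come down to a little state bookkeeping. The sketch below shows one way to model that state in plain Python, independent of Solara; QuizState and its behaviour are assumptions for illustration, not the code in app.py. In a Solara app, state like this would typically be held in a reactive variable (e.g. solara.reactive) so the UI re-renders when it changes.

```python
from dataclasses import dataclass, field


@dataclass
class QuizState:
    """Hypothetical quiz state: answers recorded so far, in question order."""
    num_questions: int = 24
    answers: list = field(default_factory=list)

    @property
    def current_index(self):
        # The next question to show is the one after the last answered question.
        return len(self.answers)

    @property
    def finished(self):
        return len(self.answers) == self.num_questions

    def handle_answer(self, answer):
        if answer not in ("Yes", "No"):
            raise ValueError("answer must be 'Yes' or 'No'")
        if not self.finished:  # ignore extra clicks after the last question
            self.answers.append(answer)

    def on_back(self):
        if self.answers:  # drop the most recent answer to revisit that question
            self.answers.pop()

    def reset_quiz(self):
        self.answers.clear()


quiz = QuizState(num_questions=3)
quiz.handle_answer("Yes")
quiz.handle_answer("No")
quiz.on_back()
print(quiz.answers, quiz.current_index)  # ['Yes'] 1
```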

Deploying the application with Ploomber Cloud

We can now package our application into an app.py script, a Dockerfile and a requirements.txt file, and deploy it on Ploomber Cloud through its command-line interface. Ploomber Cloud lets us deploy the application and share it with others, manage secrets, and automate deployments through GitHub Actions.

Sample Dockerfile

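```dockerfile
FROM python:3.11

COPY app.py app.py
# Environment variables (e.g. the OpenAI API key), read with python-dotenv
COPY .env .env
COPY requirements.txt requirements.txt

# Install CPU-only PyTorch wheels first (sentence-transformers depends on torch)
RUN pip install torch==2.1.1 torchvision==0.16.1 --index-url https://download.pytorch.org/whl/cpu
RUN pip install -r requirements.txt

# Serve the Solara app on port 80, listening on all interfaces
ENTRYPOINT ["solara", "run", "app.py", "--host=0.0.0.0", "--port=80"]
```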

Our requirements.txt file contains the following:

```text
haystack-ai==2.0.0b5
solara
python-dotenv
sentence-transformers>=2.2.0
moviepy
pydub
ploomber-cloud
```

Initialize deployment

You will need to create an account on Ploomber Cloud and generate an API key under ‘Account’ at https://www.platform.ploomber.io/. You will also need to install the Ploomber Cloud CLI:

```shell
pip install ploomber-cloud
```

Connect your local computer to your Ploomber Cloud account by running the following command:

```shell
ploomber-cloud key
```

Paste your API key when prompted. You can then initialize your deployment as follows:

```shell
ploomber-cloud init
```

This creates a ploomber-cloud.json file in your current directory containing your app ID and the deployment type (docker).

Deploy your application

You can deploy your application as follows:

```shell
ploomber-cloud deploy
```

This will deploy your application on the cloud. You can then access your application via the URL provided in the output of the command above.

Conclusion

We found that the LLM-powered approach was able to determine the MBTI type with some degree of accuracy and could handle ambiguity and ambivert characteristics. However, the LLM did not always produce the same reasoning, or even the same type, for identical answers: even with the temperature lowered to 0.1, repeated runs sometimes differed. The LLM was also unable to count the answers reliably. One promising direction is to combine the two approaches, using the deterministic approach for reliable results and the LLM to explain what the traits mean. Another possibility is to extend the application so that the user can ask open-ended questions, with the LLM generating responses based on the deterministic result.
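A minimal sketch of the hybrid idea, in which code computes the type and the LLM only explains it. It assumes the type and trait scores come from the deterministic functions shown earlier (classic_mbti and calculate_mbti_scores); build_explanation_prompt is a hypothetical helper, and sending the resulting string to the LLM (for example through an OpenAIGenerator) is left out.

```python
def build_explanation_prompt(mbti_type, scores):
    """Ask the LLM to explain a type that was already computed deterministically."""
    score_lines = "\n".join(f"{trait}: {value}" for trait, value in scores.items())
    return (
        f"The user's Myers-Briggs type, computed from their quiz answers, is {mbti_type}.\n"
        f"Trait scores:\n{score_lines}\n"
        "Do not recompute or change the type. Explain what each of its four letters "
        "means for this user and describe the defining characteristics of the type."
    )


scores = {"E": 3, "I": 0, "S": 3, "N": 0, "T": 3, "F": 0, "J": 3, "P": 0}
prompt = build_explanation_prompt("ESTJ", scores)
print(prompt)
```

Keeping the counting and the final decision in code removes the two failure modes described above, while the LLM still supplies the descriptive explanation.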