Overview of Outlines

Outlines is a Python library that simplifies using Large Language Models (LLMs) through structured generation. Structured generation constrains the output of an LLM so that it conforms to a required format, which is very useful whenever you use LLMs to produce structured data. Here are a few reasons why you might want to use it:

- Structured output ensures that the generated text conforms to a specific format or schema, making it easier to integrate with other systems, APIs, or applications.

- Outputs generated in standardized formats such as JSON or CSV can be easily parsed by automated scripts, algorithms, or data pipelines. This enables efficient extraction of relevant information and subsequent analysis.

- Organizing the information into well-defined fields makes it easier to understand the content and its context.

Main features

Now let’s discuss the key features provided by Outlines:

- JSON structured generation: Outlines can make any open-source model return a JSON object that follows a user-specified structure. This is useful when the model’s output must undergo downstream processing in your codebase, for example:

  - Parse the answer (e.g., with Pydantic), store it somewhere, return it to a user, etc.

  - Call a function with the result.

- JSON mode for vLLM: Lets you deploy an LLM service that combines JSON structured output with vLLM.

- Make LLMs follow a Regex: This feature guarantees that the text generated by the LLM matches a regular expression supplied by the user.

- Powerful prompt templating: Outlines simplifies prompt management with prompt functions. Prompt functions are Python functions containing prompt templates in their docstrings. Arguments of these functions correspond to prompt variables, and when invoked, they return the template populated with argument values.
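To make the prompt-function idea from the last bullet concrete, here is a minimal standard-library sketch. It is not Outlines' implementation or API: the `prompt` decorator and `ask_for_integers` function are hypothetical stand-ins, and the template is filled with plain `str.format` rather than whatever templating engine the library uses.

```python
import inspect
from functools import wraps


def prompt(fn):
    """Hypothetical decorator: treat fn's docstring as a prompt template."""
    template = inspect.cleandoc(fn.__doc__ or "")
    signature = inspect.signature(fn)

    @wraps(fn)
    def render(*args, **kwargs):
        # Bind call arguments to parameter names, filling in defaults.
        bound = signature.bind(*args, **kwargs)
        bound.apply_defaults()
        return template.format(**bound.arguments)

    return render


@prompt
def ask_for_integers(first, second, first_kind="odd", second_kind="even"):
    """Return two integers named {first} and {second} respectively.
    {first} is {first_kind} and {second} is {second_kind}."""


rendered = ask_for_integers("a", "b")
print(rendered)
```

The function body stays empty on purpose: the docstring carries the template, and the arguments are the prompt variables.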

To find out more about the features of Outlines, check out their documentation.
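The downstream steps mentioned under JSON structured generation (parse the answer, then call a function with the result) can be sketched with the standard library alone. The `Pair` dataclass and the `parse_pair` and `add` functions are hypothetical names used only for illustration; in a real project a Pydantic model would likely replace the manual type checks.

```python
import json
from dataclasses import dataclass


@dataclass
class Pair:
    a: int
    b: int


def parse_pair(raw: str) -> Pair:
    """Parse a JSON object with integer fields `a` and `b`."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key in ("a", "b"):
        value = data.get(key)
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(value, int) or isinstance(value, bool):
            raise ValueError(f"field {key!r} must be an integer")
    return Pair(a=data["a"], b=data["b"])


def add(a: int, b: int) -> int:
    """Example of a function called with the parsed result."""
    return a + b


pair = parse_pair('{"a": 1, "b": 2}')
total = add(pair.a, pair.b)
print(pair, total)
```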

Installation

Outlines can be installed by running the following command:

pip install outlines

Outlines can also be deployed as an LLM service with vLLM and a FastAPI server. vLLM isn’t installed by default, so you’ll need to install it separately:

pip install "outlines[serve]"

Keep in mind that vLLM requires Linux and Python >=3.8. Furthermore, it requires a GPU with compute capability >=7.0 (e.g., V100, T4, RTX20xx, A100, L4, H100).
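As a rough pre-flight check, the operating system and Python requirements above can be tested from Python itself. This is only a sketch: the function name `vllm_requirement_problems` is made up for this example, and it does not check the GPU compute capability, which needs vendor tooling.

```python
import platform
import sys


def vllm_requirement_problems(system: str, python_version: tuple) -> list:
    """Return the stated OS/Python requirements that this environment fails."""
    problems = []
    if system != "Linux":
        problems.append(f"vLLM requires Linux, found {system}")
    if tuple(python_version[:2]) < (3, 8):
        problems.append("vLLM requires Python >= 3.8")
    return problems


problems = vllm_requirement_problems(platform.system(), sys.version_info)
print(problems or "OS and Python version look fine")
```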

Finally, vLLM is compiled with CUDA 12.1, so make sure your machine is running that CUDA version. To check, run:

nvcc --version

If you’re not running CUDA 12.1, you can either install a version of vLLM compiled with the CUDA version you’re running (see the installation instructions to learn more), or install CUDA 12.1.
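If you would rather script the version check, the sketch below reads the `release X.Y` part of the `nvcc --version` output. The helper names are hypothetical, and it assumes `nvcc` prints a line such as `Cuda compilation tools, release 12.1, V12.1.105`.

```python
import re
import shutil
import subprocess


def parse_cuda_release(nvcc_output: str):
    """Extract (major, minor) from the 'release X.Y' part of nvcc's output."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_cuda_release():
    """Run `nvcc --version` if nvcc is on PATH; return None otherwise."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(
        ["nvcc", "--version"], capture_output=True, text=True, check=False
    )
    return parse_cuda_release(result.stdout)


release = installed_cuda_release()
if release is None:
    print("nvcc not found or version not recognized")
elif release == (12, 1):
    print("CUDA 12.1 detected")
else:
    print(f"CUDA {release[0]}.{release[1]} detected, not 12.1")
```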

For step-by-step instructions on installing vLLM, see this blog post.

Start an Outlines server

Once vLLM is installed you can start the server by running:

python -m outlines.serve.serve --model=<model_name>

Alternatively, you can install and run the server with Outlines' official Docker image using the command:

docker run -p 8000:8000 outlinesdev/outlines --model=<model_name>

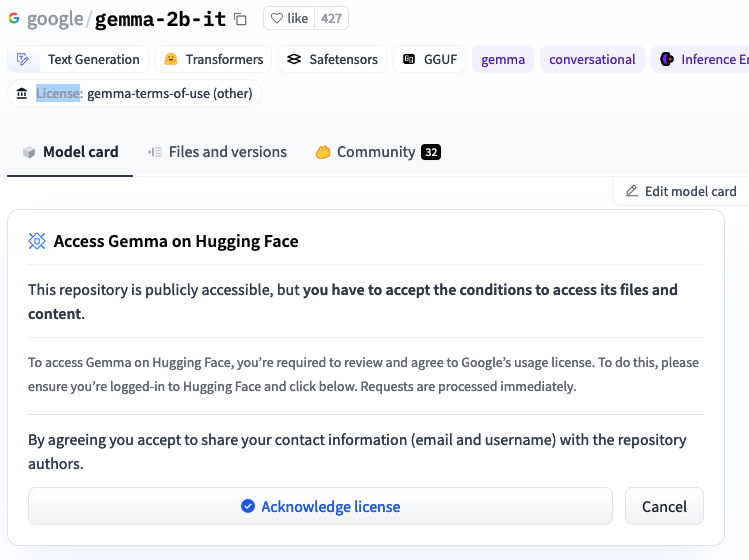

Let’s see an example of starting the server using the google/gemma-2b model. Note that some models, such as this one, require you to accept their license. To use them, you need to create a HuggingFace account, accept the model’s license, and generate a token.

For example, when opening google/gemma-2b on HuggingFace (you need to be logged in), you’ll see a request to accept the license.

Once you accept the license, head over to the tokens section and grab a token. Then, before starting vLLM, set the token as follows:

export HF_TOKEN=YOURTOKEN

Once the token is set, you can start the server.

python -m outlines.serve.serve --model=google/gemma-2b-it
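If you launch the server from a script, you can check for the token first. The sketch below builds the same command as an argument list and warns when `HF_TOKEN` is missing. The helper names `build_server_command` and `missing_token` are hypothetical, and the launch itself is left as a comment.

```python
import os
import sys


def build_server_command(model_name: str) -> list:
    """Build the argument list for the server command shown above."""
    return [sys.executable, "-m", "outlines.serve.serve", f"--model={model_name}"]


def missing_token(environ) -> bool:
    """True when HF_TOKEN is unset or empty in the given environment mapping."""
    return not environ.get("HF_TOKEN")


command = build_server_command("google/gemma-2b-it")
if missing_token(os.environ):
    print("HF_TOKEN is not set; gated models may fail to download")
print(" ".join(command))
# To actually launch the server: subprocess.run(command, check=True)
```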

Making requests

Once the server is up and running, you’re ready to send requests. You can query the model by providing a prompt along with either a JSON Schema specification or a Regex pattern.
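The two request shapes can be sketched as follows. This example only builds the request bodies and does not contact a server. The `regex` field name is an assumption based on the sentence above, so check the Outlines serving documentation before relying on it. The `satisfies` helper is hypothetical and simply shows what it means for a completion to match the pattern.

```python
import json
import re

prompt_text = "Is 1+1=2? Answer Yes or No: "
pattern = r"(Yes|No)"

# Request body constrained by a JSON Schema.
schema_body = json.dumps({
    "prompt": "Return an integer named a.",
    "schema": {
        "type": "object",
        "properties": {"a": {"type": "integer"}},
        "required": ["a"],
    },
})

# Request body constrained by a regex (the "regex" key is an assumption).
regex_body = json.dumps({"prompt": prompt_text, "regex": pattern})


def satisfies(pattern: str, completion: str) -> bool:
    """Check that the whole completion matches the pattern."""
    return re.fullmatch(pattern, completion) is not None


print(regex_body)
print(satisfies(pattern, "Yes"), satisfies(pattern, "Maybe"))
```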

Let’s look at an example using the JSON Schema specification. We’ll use the google/gemma-2b model and the Python requests library:

import json

import requests

# Change this to your own host.
OUTLINES_HOST = "https://odd-disk-6303.ploomber.app"
url = f"{OUTLINES_HOST}/generate"

# JSON Schema that the generated output must follow.
schema = {
    "type": "object",
    "properties": {
        "a": {"type": "integer"},
        "b": {"type": "integer"},
    },
    "required": ["a", "b"],
}

headers = {"Content-Type": "application/json"}
data = {
    "prompt": "Return two integers named a and b respectively. a is odd and b even.",
    "schema": schema,
}

response = requests.post(url, headers=headers, data=json.dumps(data))
print(response.json()["text"])

The output generated was as follows:

['Return two integers named a and b respectively. a is odd and b even.{"a": 1, "b": 2}']
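Notice that the returned text starts with the prompt itself, followed by the generated JSON. A small sketch for recovering the JSON object, assuming the response always echoes the prompt as a prefix as it does in the output above (the `extract_json` helper is a hypothetical name):

```python
import json

prompt_text = "Return two integers named a and b respectively. a is odd and b even."
# The "text" field from the response shown above: a list with one string.
texts = ['Return two integers named a and b respectively. a is odd and b even.{"a": 1, "b": 2}']


def extract_json(text: str, prompt: str) -> dict:
    """Drop the echoed prompt prefix, then parse the remainder as JSON."""
    if text.startswith(prompt):
        text = text[len(prompt):]
    return json.loads(text)


result = extract_json(texts[0], prompt_text)
print(result["a"], result["b"])
```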

Deploying on Ploomber Cloud

To avoid the hassle of configuration, you can deploy vLLM on Ploomber Cloud with just one click.

Start by creating an account on Ploomber Cloud.

For a sample application, refer to the example repository. The deployment steps are similar to those outlined in the vLLM deployment guide.

Generate a zip file from the Dockerfile and the dependencies file. Log in to your Ploomber Cloud account and follow the steps here to deploy it as a Docker application.

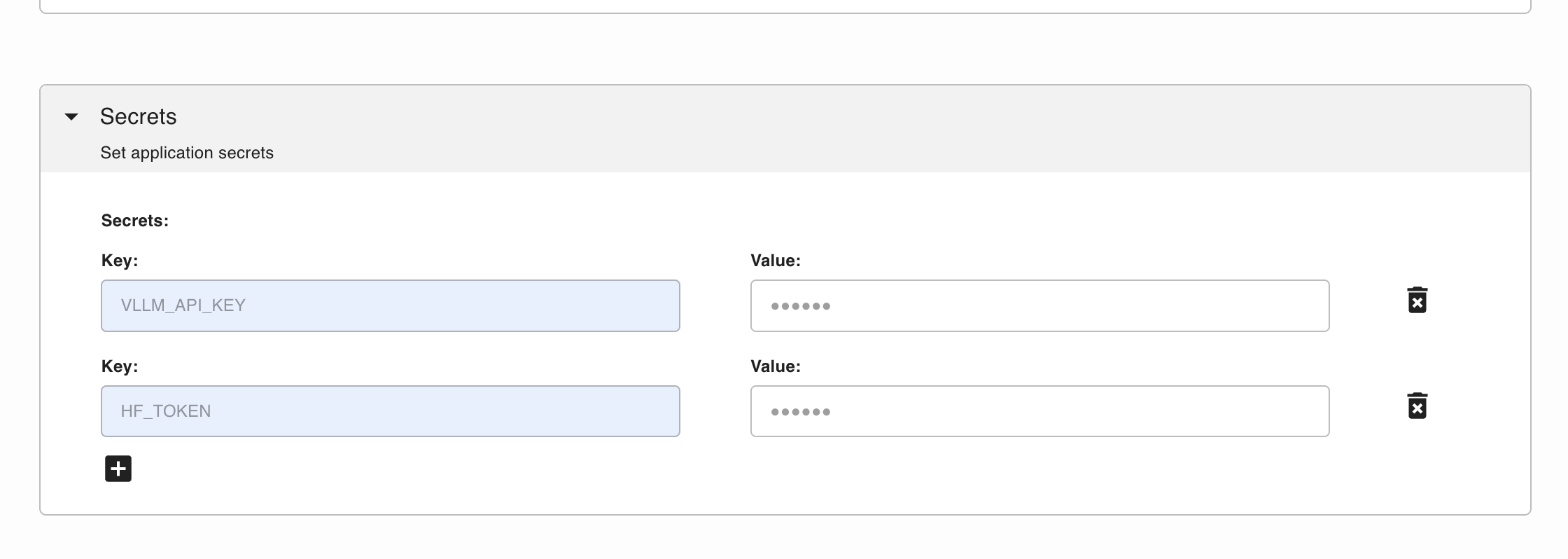

If your model requires license acceptance, you will need to provide a valid HF_TOKEN in the Secrets section for vLLM to download the weights.

Additionally, you can protect your server by setting the VLLM_API_KEY secret, which you can generate with the following command:

python -c 'import secrets; print(secrets.token_urlsafe())'

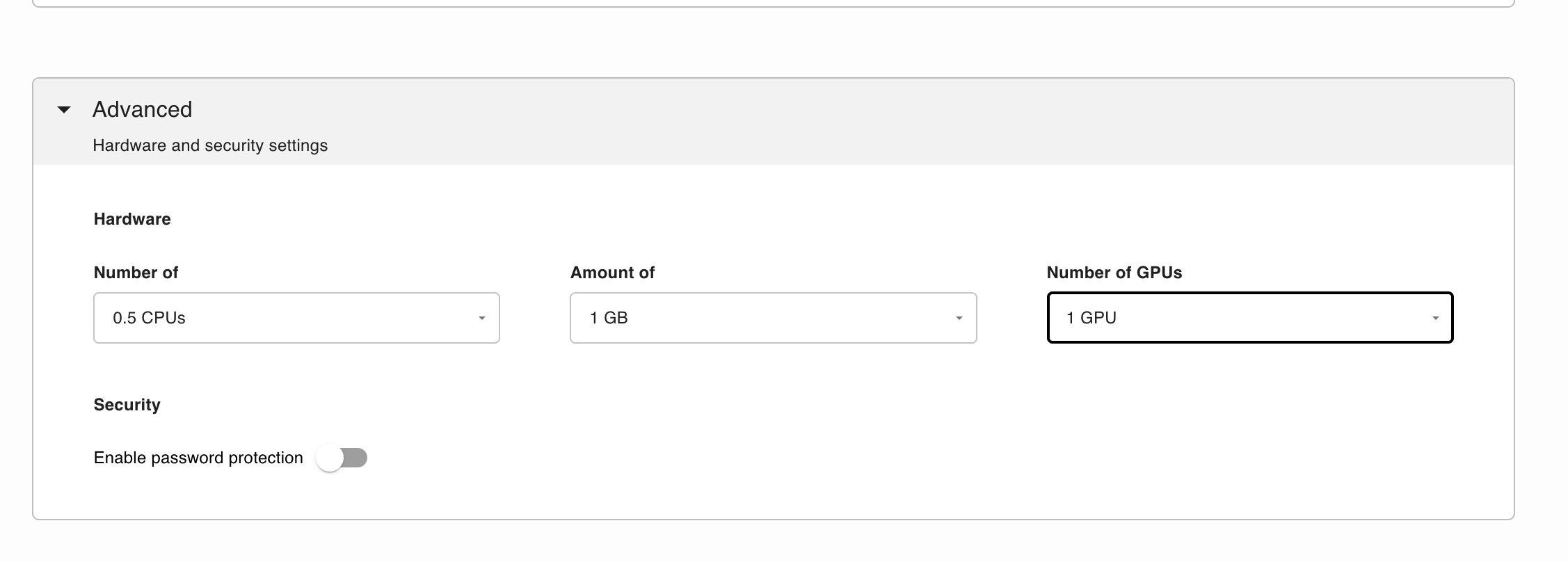

You also need to select a GPU for the deployment to work:

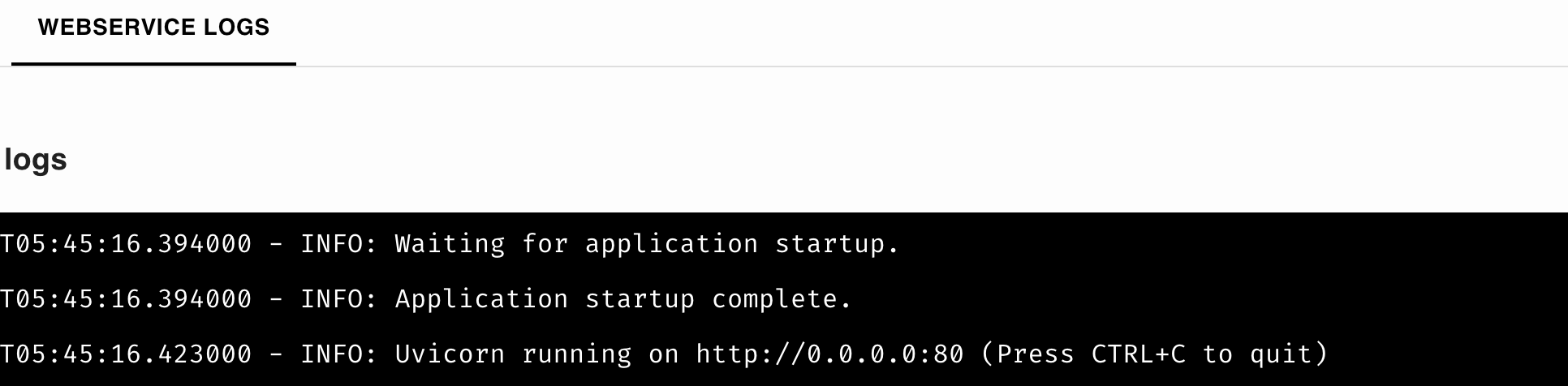

Once the deployment is complete, you should see the below logs:

After the deployment, you have the option to either send cURL requests or write a Python script using the requests library to interact with the model.
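For the Python route, a request to a protected deployment might be assembled as in the sketch below. It rests on an assumption that this post does not confirm: that the server expects the `VLLM_API_KEY` value as a Bearer token in the `Authorization` header. The `build_request` helper, the placeholder host, and the placeholder key are all hypothetical.

```python
import json


def build_request(host, prompt, schema, api_key=None):
    """Return (url, headers, body) for a POST to the /generate endpoint."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Assumption: the server expects the key as a Bearer token.
        headers["Authorization"] = f"Bearer {api_key}"
    body = json.dumps({"prompt": prompt, "schema": schema})
    return f"{host.rstrip('/')}/generate", headers, body


url, headers, body = build_request(
    "https://example.invalid",  # placeholder host, replace with your own
    "Return an integer named a.",
    {"type": "object", "properties": {"a": {"type": "integer"}}, "required": ["a"]},
    api_key="YOUR_API_KEY",
)
print(url)
# To send it: requests.post(url, headers=headers, data=body)
```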

Refer to the Serve with vLLM guide to learn more.

Conclusion

Let’s quickly recap the key points discussed in the post:

- Outlines is a powerful Python library that enables structured output generation from LLMs.

- Structured output enables easy integration with other systems or APIs, improves data accessibility, and simplifies data analysis through standardized formats like JSON and CSV.

- The library can be served via vLLM, and the model can be accessed through cURL requests or the Python requests library.

- If you lack a GPU or prefer avoiding configuration hassles, you can opt for deployment in Ploomber Cloud.