In a previous post, we demonstrated the basics of Presidio, an open-source framework from Microsoft for anonymizing PII (Personally Identifiable Information). We showed how to integrate Presidio with OpenAI to ensure that no PII is sent to OpenAI’s servers. Here’s the code:

```python
from presidio_analyzer import AnalyzerEngine
from presidio_anonymizer import AnonymizerEngine
from openai import OpenAI

client = OpenAI()
analyzer = AnalyzerEngine()
anonymizer = AnonymizerEngine()


def anonymize_message(message):
    """Anonymize a message"""
    text = message["content"]
    results = analyzer.analyze(text=text, language="en")
    anonymized = anonymizer.anonymize(text=text, analyzer_results=results).text
    return {"role": message["role"], "content": anonymized}


def chat_completions_create(user_messages):
    """Wrapper for client.chat.completions.create which anonymizes messages"""
    # anonymize messages so no PII data is leaked
    anonymized_messages = [anonymize_message(message) for message in user_messages]
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[
            {"role": "system", "content": "You are a helpful assistant that can write emails."},
        ] + anonymized_messages,
    )
    return response


user_messages = [{
    "role": "user",
    "content": """
Draft an email to person@corporation.com, mention that I've been trying to
reach at 212-555-5555 without success and we'd like her to reach out asap
""",
}]

print(chat_completions_create(user_messages).choices[0].message.content)
```

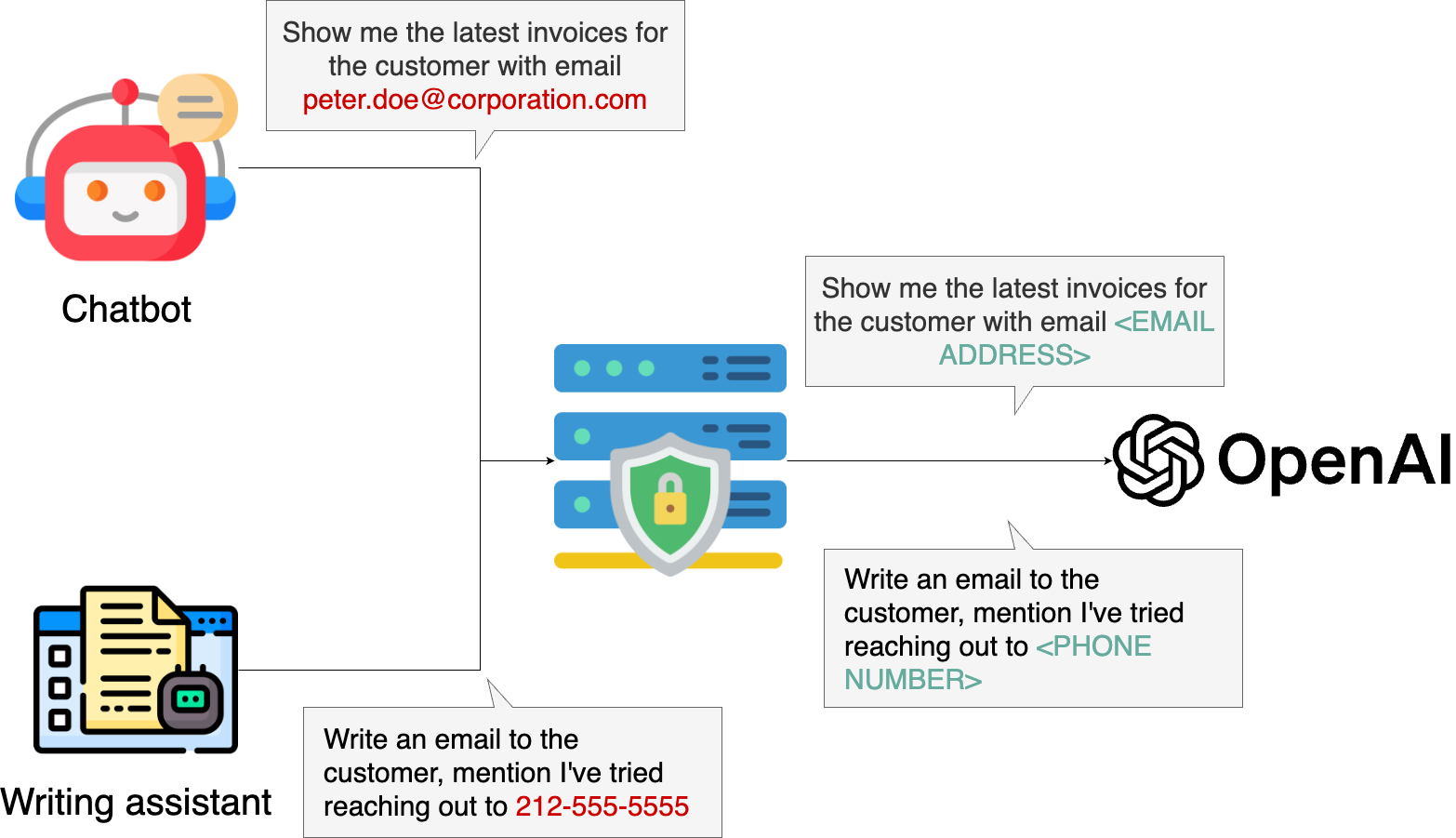

While this is a good first approach, it has a significant drawback when rolled out across an organization: every application that calls OpenAI’s API must correctly implement and configure Presidio. This creates a substantial operational burden, since we have to review every update to every such application to ensure it stays compliant.

Centralizing Data Privacy Compliance with a Reverse Proxy

A better approach is to deploy a reverse proxy: a server that intercepts every request bound for OpenAI’s API, removes PII, and forwards the sanitized request to OpenAI.

We’ll implement this using FastAPI and Presidio. Let’s review the code.

A Reverse Proxy Using FastAPI

First, let’s look at the core proxy functionality. We set up a FastAPI middleware that intercepts requests and handles them based on their path:

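```python
from urllib.parse import urljoin

import httpx
from fastapi import FastAPI, Request
from fastapi.responses import Response

app = FastAPI()
http_client = httpx.AsyncClient(timeout=60.0)

# Assumed configuration: OpenAI's API as upstream and the paths we anonymize
TARGET_URL = "https://api.openai.com"
INTERCEPT_PATHS = {"/v1/chat/completions"}
# httpx has already decoded the upstream body, so these headers no longer apply
STALE_HEADERS = {"content-encoding", "content-length", "transfer-encoding", "connection"}


@app.middleware("http")
async def reverse_proxy(request: Request, call_next):
    path = request.url.path

    if path in INTERCEPT_PATHS:
        # Anonymize the body first (see preprocess_request below)
        content = await preprocess_request(request)
    else:
        # For non-intercepted paths, pass the body through to OpenAI unchanged
        content = await request.body()

    url = urljoin(TARGET_URL, path)
    # Drop Host and Content-Length; httpx recomputes the length for the new body
    headers = {k: v for k, v in request.headers.items() if k.lower() not in ("host", "content-length")}
    response = await http_client.request(
        method=request.method,
        url=url,
        params=request.query_params.multi_items(),
        headers=headers,
        content=content,
    )
    return Response(
        content=response.content,
        status_code=response.status_code,
        headers={k: v for k, v in response.headers.items() if k.lower() not in STALE_HEADERS},
    )
```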

For paths we want to intercept (like /v1/chat/completions), we preprocess the request to anonymize sensitive data before forwarding it:

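```python
import json

from fastapi import Request


async def preprocess_request(request: Request) -> bytes:
    body_json = json.loads(await request.body())

    # Anonymize message contents if present
    for message in body_json.get("messages", []):
        content = message.get("content")
        if isinstance(content, str):
            message["content"] = anonymize_text(content)
        elif isinstance(content, list):
            # Multi-part content (e.g. text plus images): anonymize the text parts only
            for part in content:
                if part.get("type") == "text":
                    part["text"] = anonymize_text(part["text"])

    return json.dumps(body_json).encode()
```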

The anonymization is handled by Presidio, which detects personally identifiable information and, by default, replaces each entity with a placeholder naming its type (such as <EMAIL_ADDRESS>):

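```python
from presidio_analyzer import AnalyzerEngine
from presidio_anonymizer import AnonymizerEngine

analyzer = AnalyzerEngine()
anonymizer = AnonymizerEngine()


def anonymize_text(text: str) -> str:
    # Analyze text to find entities (entities=None means all supported types)
    results = analyzer.analyze(text=text, entities=None, language="en")
    # Anonymize the identified entities
    anonymized = anonymizer.anonymize(text=text, analyzer_results=results)
    return anonymized.text
```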

Example Usage

With this setup, any application in our organization can use OpenAI’s API through our proxy server by simply changing the base URL in their client configuration:

```python
from openai import OpenAI

client = OpenAI(
    base_url="http://proxy.yourcompany.com/v1",  # Point to our proxy instead of api.openai.com
)

response = client.chat.completions.create(
    model="gpt-4o-mini",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {
            "role": "user",
            "content": "Hello, write an email to the customer with email address johndoe@gmail.com",
        },
    ],
)

print(response.choices[0].message.content)
```

The proxy sanitizes every request automatically, so privacy protection is consistent across the entire organization, and individual applications need no changes beyond pointing their base URL at the proxy.

Here’s the body that our proxy receives (with PII):

```
Original request body: {
  "messages": [
    {
      "role": "system",
      "content": "You are a helpful assistant."
    },
    {
      "role": "user",
      "content": "Hello, write an email to the customer with email address johndoe@gmail.com"
    }
  ],
  "model": "gpt-4o-mini"
}
```

And here’s the body that is sent to OpenAI (with redacted PII):

```
Anonymized request body: {
  "messages": [
    {
      "role": "system",
      "content": "You are a helpful assistant."
    },
    {
      "role": "user",
      "content": "Hello, write an email to the customer with email address <EMAIL_ADDRESS>"
    }
  ],
  "model": "gpt-4o-mini"
}
```
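Because the transformation only touches the JSON body, it can be unit-tested without a running server or an OpenAI key. The sketch below uses a hypothetical helper, `anonymize_body`, that applies the same message-walking logic as `preprocess_request` to raw bytes, with the anonymizer passed in as a function. To keep it dependency-free, a simple regular expression for email addresses stands in for Presidio; that stand-in is an assumption for illustration and is far less thorough than Presidio’s recognizers.

```python
import json
import re
from typing import Callable

# Stand-in for Presidio (illustrative assumption): detects email addresses only
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def regex_anonymize(text: str) -> str:
    """Replace every email address with a Presidio-style <EMAIL_ADDRESS> placeholder."""
    return EMAIL_RE.sub("<EMAIL_ADDRESS>", text)


def anonymize_body(body: bytes, anonymize: Callable[[str], str]) -> bytes:
    """Hypothetical helper: anonymize string message contents in a chat request body."""
    body_json = json.loads(body)
    for message in body_json.get("messages", []):
        if isinstance(message.get("content"), str):
            message["content"] = anonymize(message["content"])
    return json.dumps(body_json).encode()


# The same request body the proxy logged above
original = json.dumps({
    "messages": [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello, write an email to the customer with email address johndoe@gmail.com"},
    ],
    "model": "gpt-4o-mini",
}).encode()

anonymized = json.loads(anonymize_body(original, regex_anonymize))
print(anonymized["messages"][1]["content"])
# Hello, write an email to the customer with email address <EMAIL_ADDRESS>
```

Injecting the anonymizer keeps the JSON handling testable on its own; in the proxy, `anonymize_text` would be passed instead of the regex stand-in.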

Deployment

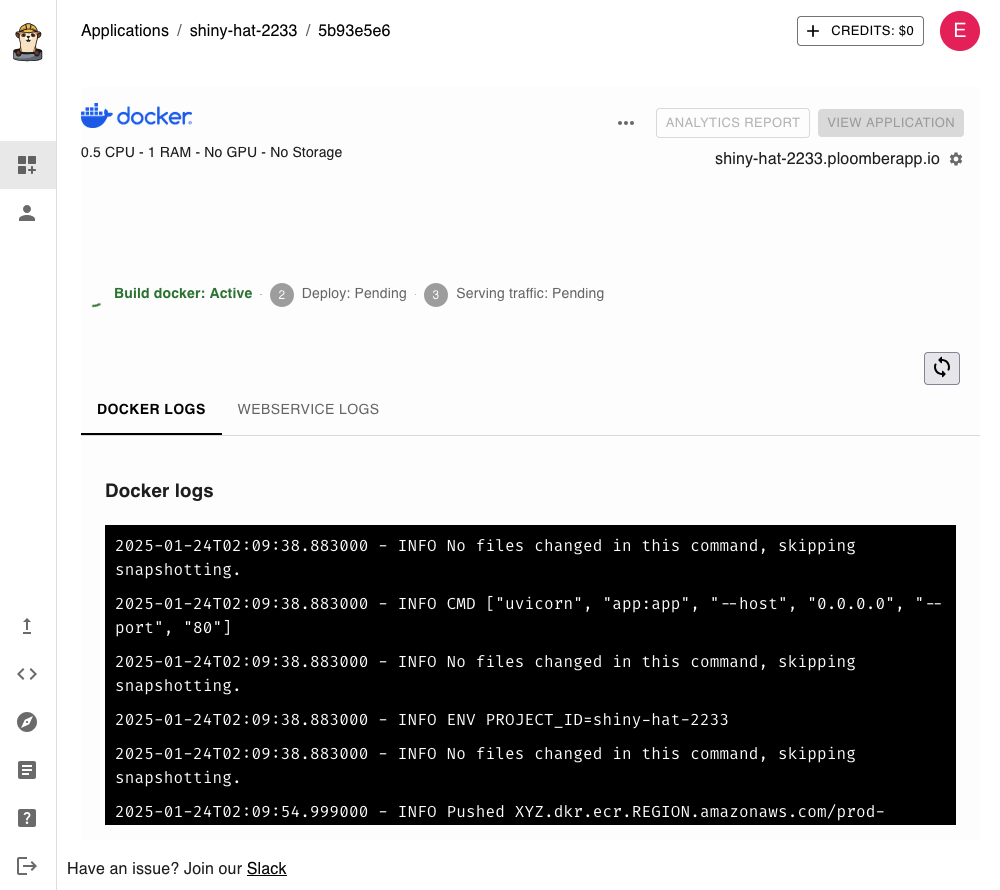

To deploy this, you’ll need a Ploomber Cloud account. Once you have one, generate an API key.

Then, install the CLI and set your key:

```sh
pip install ploomber-cloud
ploomber-cloud key YOUR-PLOOMBER-CLOUD-KEY
```

Download the example code and deploy:

```sh
# you'll need to confirm by pressing enter
ploomber-cloud examples fastapi/openai-presidio

# move to the code directory
cd openai-presidio

# init the project (and press enter to confirm)
ploomber-cloud init

# deploy project
ploomber-cloud deploy
```

The command line will print a URL where you can track deployment progress; open it.

After 1-2 minutes, deployment will finish, and you’ll be able to click on VIEW APPLICATION. If everything went correctly, you should see the following message:

```
Proxy server is running
```

Now, let’s test our reverse proxy. Open a Python console and run the following, after setting OPENAI_API_KEY and BASE_URL. The base URL is the one displayed in the Ploomber Cloud dashboard; in my case, https://shrill-sun-9295.ploomberapp.io.

```python
from openai import OpenAI

# replace with an actual openai api key
OPENAI_API_KEY = "YOUR-OPENAI-API-KEY"

# replace with your ploomber cloud URL
BASE_URL = "https://shrill-sun-9295.ploomberapp.io"

# Initialize client with custom base URL
client = OpenAI(
    api_key=OPENAI_API_KEY,
    base_url=f"{BASE_URL}/v1",
)

response = client.chat.completions.create(
    model="gpt-4o-mini",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {
            "role": "user",
            "content": "Hello, write an email to the customer with email address johndoe@gmail.com",
        },
    ],
)

print(response.choices[0].message.content)
```

It should work transparently, as if you were calling the OpenAI API directly, even though the request passes through our proxy. If you open Ploomber Cloud’s console, you’ll see the logs:

```
Original request body: {
  "messages": [
    {
      "role": "system",
      "content": "You are a helpful assistant."
    },
    {
      "role": "user",
      "content": "Hello, write an email to the customer with email address johndoe@gmail.com"
    }
  ],
  "model": "gpt-4o-mini"
}

Anonymized request body: {
  "messages": [
    {
      "role": "system",
      "content": "You are a helpful assistant."
    },
    {
      "role": "user",
      "content": "Hello, write an email to the customer with email address <EMAIL_ADDRESS>"
    }
  ],
  "model": "gpt-4o-mini"
}
```

The original request body is what you sent to the API. The reverse proxy intercepts it, uses Presidio to redact PII, and produces the anonymized request body, which is what gets sent to OpenAI.

Limitations

The current implementation is basic: it only removes PII from requests to the /v1/chat/completions endpoint, so supporting other endpoints requires more work.
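Extending the proxy mostly means knowing where each endpoint keeps user text. As a minimal sketch, assuming the request shapes of OpenAI’s legacy Completions endpoint (`prompt`) and Embeddings endpoint (`input`), both of which accept a string or a list of strings, a hypothetical table `TEXT_FIELDS` could map each path to the top-level fields to anonymize (those paths would also need to be added to `INTERCEPT_PATHS`). The `anonymize` argument stands in for `anonymize_text`; non-string values such as token arrays are left untouched.

```python
import json
from typing import Any, Callable

# Hypothetical mapping from endpoint path to the top-level fields holding user text
TEXT_FIELDS = {
    "/v1/completions": ["prompt"],
    "/v1/embeddings": ["input"],
}


def anonymize_value(value: Any, anonymize: Callable[[str], str]) -> Any:
    """Anonymize a string, or each string inside a (possibly nested) list."""
    if isinstance(value, str):
        return anonymize(value)
    if isinstance(value, list):
        return [anonymize_value(item, anonymize) for item in value]
    return value  # e.g. integers in token arrays


def anonymize_fields(path: str, body: bytes, anonymize: Callable[[str], str]) -> bytes:
    """Anonymize the configured fields for this path; other paths pass through unchanged."""
    body_json = json.loads(body)
    for field in TEXT_FIELDS.get(path, []):
        if field in body_json:
            body_json[field] = anonymize_value(body_json[field], anonymize)
    return json.dumps(body_json).encode()


# Usage with a trivial stand-in anonymizer
def redact(text: str) -> str:
    return text.replace("johndoe@gmail.com", "<EMAIL_ADDRESS>")


body = json.dumps({"model": "text-embedding-3-small", "input": ["contact johndoe@gmail.com", "no PII here"]}).encode()
print(json.loads(anonymize_fields("/v1/embeddings", body, redact))["input"])
# ['contact <EMAIL_ADDRESS>', 'no PII here']
```

A lookup table keeps per-endpoint knowledge in one place, so adding an endpoint is a one-line change rather than a new code path.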

Furthermore, we’re using Presidio’s defaults, which might not match your company’s data policy. Check out our blog on Presidio to learn how to customize the detection rules.

Finally, there is some information loss when redacting PII. In our test, we sent an email address, but OpenAI only sees the <EMAIL_ADDRESS> placeholder. If our prompt included more than one email address, the model would see the same <EMAIL_ADDRESS> placeholder several times and could not tell the addresses apart. A better alternative would be to generate unique identifiers so the model knows that each one is a different address (without disclosing the actual email addresses). Additionally, it would be beneficial to have round-trip conversion so users see the actual email addresses in the model’s responses.
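Here is a minimal sketch of both ideas, under stated assumptions: a hypothetical `Pseudonymizer` class assigns each distinct email address a numbered placeholder such as `<EMAIL_ADDRESS_1>`, remembers the mapping for the lifetime of one request, and restores the original values in the model’s reply. A regular expression again stands in for Presidio’s detection. In a real proxy the mapping must stay on the proxy, and restoration only works if the model reproduces the placeholders verbatim.

```python
import re

# Stand-in detector for illustration only; Presidio would supply entity spans instead
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


class Pseudonymizer:
    """Hypothetical helper: reversible, per-request email pseudonymization."""

    def __init__(self) -> None:
        self.to_placeholder: dict[str, str] = {}
        self.to_original: dict[str, str] = {}

    def _placeholder(self, match: re.Match) -> str:
        email = match.group(0)
        if email not in self.to_placeholder:
            # Same address -> same placeholder; a new address gets the next number
            placeholder = f"<EMAIL_ADDRESS_{len(self.to_placeholder) + 1}>"
            self.to_placeholder[email] = placeholder
            self.to_original[placeholder] = email
        return self.to_placeholder[email]

    def anonymize(self, text: str) -> str:
        """Replace each email with its numbered placeholder (applied to the request)."""
        return EMAIL_RE.sub(self._placeholder, text)

    def deanonymize(self, text: str) -> str:
        """Restore original addresses (applied to the model's response)."""
        for placeholder, email in self.to_original.items():
            text = text.replace(placeholder, email)
        return text


p = Pseudonymizer()
prompt = p.anonymize("Email alice@example.com and bob@example.com; cc alice@example.com.")
print(prompt)
# Email <EMAIL_ADDRESS_1> and <EMAIL_ADDRESS_2>; cc <EMAIL_ADDRESS_1>.
print(p.deanonymize("Sent to <EMAIL_ADDRESS_2>."))
# Sent to bob@example.com.
```

Because each placeholder ends with `>`, `<EMAIL_ADDRESS_1>` never matches inside `<EMAIL_ADDRESS_10>`, so plain string replacement is enough for the reverse step.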

If you’re interested in deploying an enterprise-grade proxy at your company, contact us. Our enterprise-grade solution solves all of the previously mentioned issues and offers advanced features like a user interface to customize PII detection rules, advanced logging for auditing, and more.