vLLM is one of the most exciting LLM projects today. With over 200k monthly downloads, and a permissive Apache 2.0 License, vLLM is becoming an increasingly popular way to serve LLMs at scale.

In this tutorial, I’ll show you how you can configure and run vLLM to serve open-source LLMs in production.

Getting started with vLLM

For those new to vLLM, let’s first explain what vLLM is.

vLLM is an open-source project that allows you to do LLM inference and serving. Inference means that you can download model weights and pass them to vLLM to perform inference via its Python API. Here’s an example from the documentation:

from vllm import LLM, SamplingParams

prompts = [
    "Hello, my name is",
    "The president of the United States is",
    "The capital of France is",
    "The future of AI is",
]

# initialize
sampling_params = SamplingParams(temperature=0.8, top_p=0.95)
llm = LLM(model="facebook/opt-125m")

# perform the inference
outputs = llm.generate(prompts, sampling_params)

# print outputs
for output in outputs:
    prompt = output.prompt
    generated_text = output.outputs[0].text
    print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")

In this regard, vLLM is similar to Hugging Face’s transformers library. As a comparison, here’s how you do inference on the same model using transformers:

from transformers import pipeline

generator = pipeline('text-generation', model="facebook/opt-125m")

generator("Hello, my name is")

Running inference using the Python API, as I showed in the previous example, is fine for quick testing. In a production setting, however, we want to offer a simple interface so other parts of the system can call the model easily. A great solution is to expose our model via an API.

Let’s say you found out about vLLM and now you want to build a REST API to serve a model. You might build a Flask app like this:

from flask import Flask, request, jsonify
from vllm import LLM, SamplingParams

app = Flask(__name__)

sampling_params = SamplingParams(temperature=0.8, top_p=0.95)
llm = LLM(model="facebook/opt-125m")


@app.route('/generate', methods=['POST'])
def generate():
    data = request.get_json()
    prompts = data.get('prompts', [])

    outputs = llm.generate(prompts, sampling_params)

    # Prepare the outputs.
    results = []

    for output in outputs:
        prompt = output.prompt
        generated_text = output.outputs[0].text
        results.append({
            'prompt': prompt,
            'generated_text': generated_text
        })

    return jsonify(results)


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=5000)

Our users can now consume our model by hitting the /generate endpoint. However, this has many limitations. If many users hit the endpoint simultaneously, Flask will attempt to run the requests concurrently, which this code is not built to handle, and the server can crash. We also need to implement our own authentication mechanism. Finally, interoperability is limited: users must read our model’s REST API documentation to interact with our model.

This is where the serving part of vLLM shines, since it provides all of this for us. If vLLM’s Python API is akin to the transformers library, vLLM’s server is akin to Hugging Face’s Text Generation Inference (TGI).

Now that we have explained the basics of vLLM, let’s install it!

Installing vLLM

Installing vLLM is simple:

pip install vllm

Keep in mind that vLLM requires Linux and Python >=3.8. Furthermore, it requires a GPU with compute capability >=7.0 (e.g., V100, T4, RTX20xx, A100, L4, H100).
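
The requirements above can be turned into a quick pre-flight check. The following is a minimal sketch, not part of vLLM: `check_requirements` and `detect_compute_capability` are hypothetical helper names, and the GPU lookup assumes torch is already installed (it returns None otherwise).

```python
import platform
import sys

MIN_PYTHON = (3, 8)
MIN_COMPUTE_CAPABILITY = (7, 0)


def check_requirements(system, python_version, compute_capability):
    """Return a list of problems; an empty list means every requirement is met."""
    problems = []
    if system != "Linux":
        problems.append(f"vLLM requires Linux, found {system}")
    if tuple(python_version[:2]) < MIN_PYTHON:
        problems.append("Python >=3.8 is required")
    if compute_capability is None:
        problems.append("no CUDA GPU detected")
    elif tuple(compute_capability) < MIN_COMPUTE_CAPABILITY:
        problems.append("GPU compute capability >=7.0 is required")
    return problems


def detect_compute_capability():
    """Return (major, minor) for GPU 0, or None if torch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_capability(0)


# Usage on the machine you plan to serve from:
#   check_requirements(platform.system(), sys.version_info, detect_compute_capability())
```

An empty list means the machine satisfies the three requirements listed above; anything else tells you what to fix before running pip install.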

Finally, vLLM is compiled with CUDA 12.1, so you need to ensure that your machine is running that CUDA version. To check it, run:

nvcc --version

If you’re not running CUDA 12.1, you can either install a version of vLLM compiled with the CUDA version you’re running (see the installation instructions to learn more) or install CUDA 12.1.
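
If you want to automate this check (for example, in a setup script), you can parse the output of nvcc. This is a rough sketch: `parse_cuda_release` and `installed_cuda_release` are hypothetical helpers, and the regular expression assumes nvcc prints a line containing "release X.Y", which is the format I have seen but is not guaranteed across versions.

```python
import re
import subprocess


def parse_cuda_release(nvcc_output):
    """Extract the CUDA release (e.g. "12.1") from `nvcc --version` output."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_cuda_release():
    """Run nvcc and return its release string, or None if nvcc is unavailable."""
    try:
        result = subprocess.run(
            ["nvcc", "--version"], capture_output=True, text=True, check=True
        )
    except (FileNotFoundError, subprocess.CalledProcessError):
        return None
    return parse_cuda_release(result.stdout)


# Usage: compare against the version vLLM was compiled with.
#   installed_cuda_release() == "12.1"
```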

Checking your installation

Before continuing, I’d advise you to check your installation by running some sanity checks:

# ensure torch is working with CUDA, this should print: True

python -c 'import torch; print(torch.cuda.is_available())'

Now, store the following in a check-vllm.py file:

from vllm import LLM, SamplingParams

prompts = [
    "Mexico is famous for ",
    "The largest country in the world is ",
]

sampling_params = SamplingParams(temperature=0.8, top_p=0.95)
llm = LLM(model="facebook/opt-125m")

responses = llm.generate(prompts, sampling_params)

for response in responses:
    print(response.outputs[0].text)

And run the script:

python check-vllm.py

After the model is loaded, you’ll see some output; in my case, I got this:

~~national~~ cultural and artistic art. They've already worked with him.

~~the country~~ a capitalist system with the highest GDP per capita in the world

Starting the vLLM server

Now that we have vLLM installed, let’s start the server. The basic command is as follows:

python -m vllm.entrypoints.openai.api_server --model=MODELTORUN

Where MODELTORUN is the model you want to serve. For example, to serve google/gemma-2b:

python -m vllm.entrypoints.openai.api_server --model=google/gemma-2b

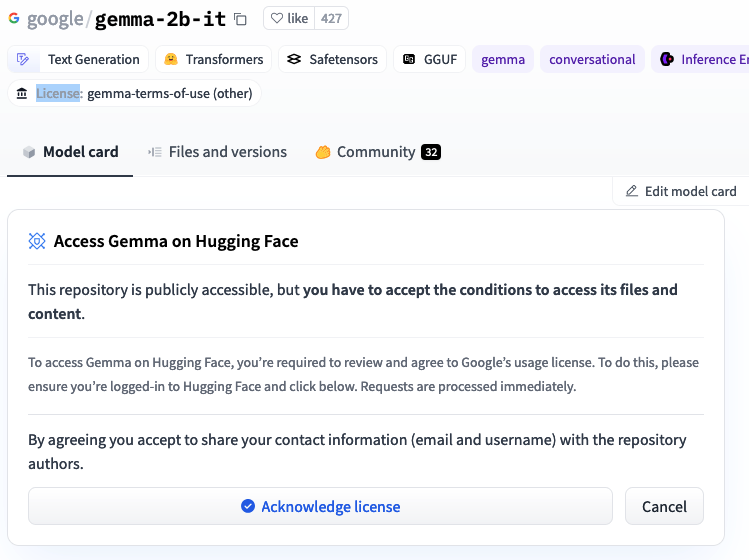

Note that some models, such as google/gemma-2b require you to accept their license,

hence, you need to create a HuggingFace account, accept the model’s license, and generate

a token.

For example, when opening google/gemma-2b on HugginFace

(you need to be logged in), you’ll see this:

Once you accept the license, head over to the tokens section and grab a token. Then, before starting vLLM, set the token as follows:

export HF_TOKEN=YOURTOKEN

Once the token is set, you can start the server.

python -m vllm.entrypoints.openai.api_server --model=google/gemma-2b

Note that the token is required even if you downloaded the weights. Otherwise you’ll get the following error:

File "/opt/conda/lib/python3.10/site-packages/huggingface_hub/hf_file_system.py", line 863, in _raise_file_not_found

raise FileNotFoundError(msg) from err

FileNotFoundError: google/gemma-2b (repository not found)
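
Since the "repository not found" message does not mention the token, it can help to fail early with a clearer error. Here is a small sketch of a launcher-side check; `require_hf_token` and `server_command` are hypothetical helpers of my own, and only the HF_TOKEN variable and the server command come from the steps above.

```python
import os
import sys


def require_hf_token(env=None):
    """Return the Hugging Face token from the environment, or raise early."""
    env = os.environ if env is None else env
    token = env.get("HF_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "HF_TOKEN is not set: gated models such as google/gemma-2b "
            "will fail with 'repository not found'"
        )
    return token


def server_command(model):
    """Build the same launch command used in this section."""
    return [
        sys.executable, "-m", "vllm.entrypoints.openai.api_server",
        f"--model={model}",
    ]


# Usage: call require_hf_token() first, then start the server with
#   subprocess.run(server_command("google/gemma-2b"), check=True)
```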

Setting the dtype

One important setting to consider is dtype, which controls the data type for the model weights. You might need to tweak this parameter depending on your GPU. For example, running google/gemma-2b with the default value on an NVIDIA Tesla T4:

# --dtype=auto is the default value

python -m vllm.entrypoints.openai.api_server --model=google/gemma-2b --dtype=auto

yields the following error:

ValueError: Bfloat16 is only supported on GPUs with compute capability of at least 8.0.

Your Tesla T4 GPU has compute capability 7.5. You can use float16 instead by explicitly

setting the`dtype` flag in CLI, for example: --dtype=half.

Changing the --dtype flag allows us to run the model on a T4:

python -m vllm.entrypoints.openai.api_server --model=google/gemma-2b --dtype=half
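
The rule stated in the error message can be captured in a small helper. This is only a sketch: `pick_dtype` is a hypothetical function, not part of vLLM, and it assumes the model’s weights are stored in bfloat16 (as the error implies for google/gemma-2b). For models stored in another format, the default --dtype=auto may already work.

```python
def pick_dtype(compute_capability):
    """Choose a --dtype value for a bfloat16 model.

    bfloat16 needs compute capability >= 8.0 (per the error message above);
    older GPUs such as the T4 (7.5) fall back to half precision.
    """
    major, minor = compute_capability
    return "bfloat16" if (major, minor) >= (8, 0) else "half"


def dtype_flag(compute_capability):
    """Format the choice as the CLI flag used in this section."""
    return f"--dtype={pick_dtype(compute_capability)}"


# Usage with torch installed:
#   dtype_flag(torch.cuda.get_device_capability(0))
```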

If this is the first time you start vLLM with the passed --model, it’ll take a few minutes since it has to download the weights. Further initializations will be faster since weights are stored in the ~/.cache directory; however, since the model has to load into memory, it’ll still take some time to load (depending on the model size).

If you see a message like this:

INFO: Started server process [428]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:80 (Press CTRL+C to quit)

vLLM is ready to accept requests!
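
If you start the server from a script, you may prefer to wait for it programmatically instead of watching the logs. Below is a minimal sketch using only the standard library: `wait_until_ready` is a hypothetical helper that polls the /v1/models route of the OpenAI-compatible server. It assumes no API key is configured yet (with a key set, the unauthenticated request would be rejected and the function would time out).

```python
import time
import urllib.error
import urllib.request


def wait_until_ready(base_url, timeout=300.0, interval=2.0):
    """Poll GET {base_url}/v1/models until it returns 200 or the timeout expires."""
    deadline = time.monotonic() + timeout
    url = f"{base_url.rstrip('/')}/v1/models"
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if response.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            pass  # the server is not accepting requests yet
        time.sleep(interval)
    return False


# Usage: adjust the host and port to match your server.
#   wait_until_ready("http://0.0.0.0:80")
```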

Making requests

Once the server is running, you can make requests; here’s an example using google/gemma-2b and the Python requests library:

# remember to run: pip install requests
import requests
import json

# change for your host
VLLM_HOST = "https://autumn-snow-1380.ploomber.app"
url = f"{VLLM_HOST}/v1/completions"

headers = {"Content-Type": "application/json"}
data = {
    "model": "google/gemma-2b",
    "prompt": "JupySQL is",
    "max_tokens": 100,
    "temperature": 0
}

response = requests.post(url, headers=headers, data=json.dumps(data))

print(response.json()["choices"][0]["text"])

This is the response that I got:

JupySQL is a Python library that allows you to create and run SQL queries in Jupyter notebooks. It is a powerful tool for data analysis and visualization, and can be used to explore and manipulate large datasets.

How does JupySQL work?

JupySQL works by connecting to a database server and executing SQL queries. It supports a wide range of databases, including MySQL, PostgreSQL, and SQLite.

Once you have connected to a database, you can create and run SQL queries in
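
Notice that the response above stops mid-sentence: the model hit the max_tokens limit. In the OpenAI completions format, each choice carries a finish_reason field that tells you when this happens. Here is a small sketch; `was_truncated` is a hypothetical helper and the sample dictionary is made up for illustration, not a real server response.

```python
def was_truncated(completion):
    """Return True if the first choice stopped because it hit max_tokens."""
    return completion["choices"][0].get("finish_reason") == "length"


# Illustrative payload shaped like a completions response (not real output).
sample = {
    "choices": [
        {"text": "JupySQL is a Python library that", "finish_reason": "length"}
    ]
}

if was_truncated(sample):
    print("Output was cut off; consider raising max_tokens")
```

With the requests example above, you would call was_truncated(response.json()).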

Using the OpenAI client

vLLM exposes an API that mimics OpenAI’s, which means you can use OpenAI’s Python package and point it at your vLLM server. Let’s see an example:

# NOTE: remember to run: pip install openai
from openai import OpenAI

# we haven't configured authentication, we pass a dummy value
openai_api_key = "EMPTY"

# modify this value to match your host, remember to add /v1 at the end
openai_api_base = "https://autumn-snow-1380.ploomber.app/v1"

client = OpenAI(
    api_key=openai_api_key,
    base_url=openai_api_base,
)

completion = client.completions.create(model="google/gemma-2b",
                                       prompt="JupySQL is",
                                       max_tokens=20)

print(completion.choices[0].text)

I got the following output:

a powerful SQL editor and IDE. It integrates with Google Jupyter Notebook,

which allows users to create and

Using the chat API

The previous example used the completions API, but you might be more familiar with the chat API. Note that if you use the chat API, you must use an instruction-tuned model. google/gemma-2b is not tuned for instructions, so let’s use google/gemma-2b-it instead. Start the vLLM server with that model:

python -m vllm.entrypoints.openai.api_server \
    --host 0.0.0.0 --port 80 \
    --model google/gemma-2b-it \
    --dtype=half

Now we can use the client.chat.completions.create function:

# NOTE: remember to run: pip install openai
from openai import OpenAI

openai_api_key = "EMPTY"
openai_api_base = "https://autumn-snow-1380.ploomber.app/v1"

client = OpenAI(
    api_key=openai_api_key,
    base_url=openai_api_base,
)

chat_response = client.chat.completions.create(
    model="google/gemma-2b-it",
    messages=[
        {"role": "user", "content": "Tell me in one sentence what Mexico is famous for"},
    ]
)

print(chat_response.choices[0].message.content)

Output:

Mexico is known for its rich culture, vibrant cities, stunning natural beauty,

and delicious cuisine.

Sounds accurate!

If you’ve used OpenAI’s API before, you might remember that the messages argument usually contains some messages with {"role": "system", "content": ...}:

chat_response = client.chat.completions.create(
    model="google/gemma-2b-it",
    messages=[
        {"role": "system", "content": "You're a helpful assistant."},
        {"role": "user", "content": "Tell me in one sentence what Mexico is famous for"},
    ]
)

However, some models do not support the system role. For example, google/gemma-2b-it returns the following:

BadRequestError: Error code: 400 - {'object': 'error', 'message': 'System role not

supported', 'type': 'BadRequestError', 'param': None, 'code': 400}

Check your model’s documentation to know how to use the chat API.
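
One common workaround when a model rejects the system role is to fold the system instructions into the first user message before sending the request. The sketch below is an assumption on my part, not something vLLM or the model’s documentation prescribes; `fold_system_messages` is a hypothetical helper, and whether the model follows instructions passed this way depends on the model.

```python
def fold_system_messages(messages):
    """Return a copy of messages with system content merged into the first user turn."""
    system_parts = [m["content"] for m in messages if m["role"] == "system"]
    others = [dict(m) for m in messages if m["role"] != "system"]
    if not system_parts:
        return others
    prefix = "\n\n".join(system_parts)
    for message in others:
        if message["role"] == "user":
            message["content"] = f"{prefix}\n\n{message['content']}"
            return others
    # No user message to attach to: send the instructions as a user turn.
    return [{"role": "user", "content": prefix}] + others


messages = [
    {"role": "system", "content": "You're a helpful assistant."},
    {"role": "user", "content": "Tell me in one sentence what Mexico is famous for"},
]
print(fold_system_messages(messages))
```

You can then pass the result as the messages argument of client.chat.completions.create.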

Security settings

By default, your server won’t have any authentication. If you’re planning to expose your server to the internet, ensure you set an API key; you can generate one as follows:

export VLLM_API_KEY=$(python -c 'import secrets; print(secrets.token_urlsafe())')

# print the API key

echo $VLLM_API_KEY

And start vLLM:

python -m vllm.entrypoints.openai.api_server --model google/gemma-2b-it --dtype=half

Now, our server will be protected, and all requests that don’t have the API key will be rejected. Note that in the previous command, we did not pass --api-key because vLLM will automatically read the VLLM_API_KEY environment variable.

To test that your server enforces API key authentication, make a call using any of the earlier Python snippets. You’ll see the following error:

No key: `AuthenticationError: Error code: 401 - {'error': 'Unauthorized'}`

To fix this, initialize the OpenAI client with the correct API key:

from openai import OpenAI

openai_api_key = "THE_ACTUAL_API_KEY"
openai_api_base = "https://autumn-snow-1380.ploomber.app/v1"

client = OpenAI(
    api_key=openai_api_key,
    base_url=openai_api_base,
)
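
If you’re calling the server with the requests library instead of the OpenAI client, the key goes in the Authorization header as a bearer token, which is what the OpenAI client does under the hood. A minimal sketch, where `auth_headers` is a hypothetical helper:

```python
def auth_headers(api_key):
    """Build request headers that carry the API key as a bearer token."""
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }


# Usage with the earlier requests example:
#   requests.post(url, headers=auth_headers("THE_ACTUAL_API_KEY"), data=json.dumps(data))
print(auth_headers("THE_ACTUAL_API_KEY")["Authorization"])
```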

Another essential security requirement is to serve your API via HTTPS; however, this requires extra configuration, such as getting a TLS certificate. If you want to skip all this headache, skip to the final section, where we’ll show a one-click solution for securely deploying a vLLM server.

Considerations for a production deployment

Here are some considerations for a production deployment:

When deploying vLLM, you must ensure that the API restarts if it crashes (or if the physical server is restarted). You can do so with tools such as systemd.

To make your deployment more portable, we recommend using Docker (more in the next section). Also, make sure to pin all Python dependencies so upgrades don’t break your installation (e.g., using pip freeze).
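
To illustrate the restart requirement, here is a toy supervisor loop in Python. It is a stand-in for demonstration only, not a replacement for systemd: it does not survive machine reboots, and `run_with_restarts` is a hypothetical helper of my own.

```python
import subprocess
import sys
import time


def run_with_restarts(command, max_restarts=3, delay=1.0):
    """Run command, restarting it whenever it exits with a non-zero code.

    Returns the number of restarts performed before the command either
    exited cleanly or the restart budget ran out.
    """
    restarts = 0
    while True:
        exit_code = subprocess.call(command)
        if exit_code == 0 or restarts >= max_restarts:
            return restarts
        restarts += 1
        time.sleep(delay)


# Usage sketch: supervise the vLLM server process.
#   run_with_restarts([sys.executable, "-m", "vllm.entrypoints.openai.api_server",
#                      "--model", "google/gemma-2b-it", "--dtype=half"])
```

A real deployment should rely on a process manager such as systemd, which also handles starting the service at boot.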

Using PyTorch’s Docker image

We recommend using PyTorch’s official Docker image since it already comes with torch and the CUDA toolkit installed.

Here’s a sample Dockerfile you can use:

FROM pytorch/pytorch:2.1.2-cuda12.1-cudnn8-devel

WORKDIR /srv

RUN pip install vllm==0.3.3 --no-cache-dir

# if the model you want to serve requires you to accept the license terms,
# you must pass a HF_TOKEN environment variable, also ensure to pass a VLLM_API_KEY
# environment variable to authenticate your API
# depending on your GPU, you might or might not need to pass --dtype
ENTRYPOINT ["python", "-m", "vllm.entrypoints.openai.api_server", \
    "--host", "0.0.0.0", "--port", "80", \
    "--model", "google/gemma-2b-it", \
    "--dtype=half"]

Cautionary tale about a bug in the transformers==4.39.1 package

tl;dr: when installing vLLM in the official PyTorch Docker image, ensure you use the image with the correct PyTorch version. To do so, check the corresponding pyproject.toml file.

While developing this guide, we encountered a bug in the transformers package. We wrote a Dockerfile that used torch==2.2.2 (the most recent version at the time of writing) and then installed vllm==0.3.3:

FROM pytorch/pytorch:2.2.2-cuda12.1-cudnn8-devel

RUN pip install vllm==0.3.3

However, when starting the vLLM server, we encountered the following error:

File /opt/conda/lib/python3.10/site-packages/transformers/utils/generic.py:478

475 return output_type(**dict(zip(context, values)))

477 if version.parse(get_torch_version()) >= version.parse("2.2"):

--> 478 _torch_pytree.register_pytree_node(

479 ModelOutput,

480 _model_output_flatten,

481 partial(_model_output_unflatten, output_type=ModelOutput),

482 serialized_type_name=f"{ModelOutput.__module__}.{ModelOutput.__name__}",

483 )

484 else:

485 _torch_pytree._register_pytree_node(

486 ModelOutput,

487 _model_output_flatten,

488 partial(_model_output_unflatten, output_type=ModelOutput),

489 )

AttributeError: module 'torch.utils._pytree' has no attribute 'register_pytree_node'

Upon further investigation, we realized that the problem is in the transformers package, specifically in the _is_package_available function. This function determines the current torch version, which is used in several parts of the codebase. Even though vLLM does not use transformers for inference, it seems to use it for loading model configuration parameters. The problem is that the transformers library uses a method that might return an incorrect version.

In our case, the Docker image had torch==2.2.2, but since vllm==0.3.3 requires torch==2.1.2, running pip install vllm==0.3.3 downgraded PyTorch to version 2.1.2. However, transformers thought it still had torch==2.2.2, crashing execution.

This happened with transformers==4.39.1, so it might be fixed in future versions.
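
If you suspect a similar mismatch, you can compare the torch version recorded in the installed package metadata with the version the imported module reports. This is a diagnostic sketch under my own assumption that the stale value comes from package metadata; `base_version`, `torch_versions_agree`, and `installed_torch_versions` are hypothetical helpers.

```python
from importlib import metadata


def base_version(version_string):
    """Strip local build suffixes, e.g. "2.1.2+cu121" becomes "2.1.2"."""
    return version_string.split("+", 1)[0]


def torch_versions_agree(metadata_version, runtime_version):
    """Compare two version strings while ignoring build suffixes."""
    return base_version(metadata_version) == base_version(runtime_version)


def installed_torch_versions():
    """Return (metadata_version, runtime_version), or None if torch is missing."""
    try:
        import torch
        return metadata.version("torch"), torch.__version__
    except (ImportError, metadata.PackageNotFoundError):
        return None


# Usage inside the container:
#   versions = installed_torch_versions()
#   if versions and not torch_versions_agree(*versions):
#       print("torch version mismatch:", versions)
```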

Deploying on Ploomber Cloud

If you want to skip the configuration headache, you can deploy vLLM on Ploomber Cloud with one click. We take care of:

- Installing all the proper CUDA drivers

- Optimizing the hardware vLLM runs on to maximize efficiency

- Providing you with a TLS certificate to serve over HTTPS

- Letting you stop the server at any time to save costs

To learn more, check our documentation